10+2[1] 12Msc European Forestry

Marcos Rodrigues & Pere Gelabert

October 8, 2024

R is an object-oriented programming language and environment for statistical computing that provides relatively simple access to a wide variety of statistical techniques (R Core Team 2016). R offers a complete programming language with which to add new methods by defining functions or automating iterative processes.

Many statistical techniques, from the classic to the latest methodologies, are available in R, with the user in charge of locating the package that best suits their needs.

R can be considered as an integrated set of programs for data manipulation, calculation and graphics. Among other features R allows:

R is distributed as open source software, so obtaining it is completely free.

R is also multiplatform software which means it can be installed and used in various operating systems (OS), mainly Windows and Linux. However, the available functions and packages syntax is practically the same in any OS. From an operational point of view, R consists of a base system and additional packages that extend its functionality. Among the main types of packages we found:

The functions included in the packages installed by default, that is, those that are predefined in the basic installation R, are available for use at any time. However, in order to use the functions of new packages, specific calls must be made to those packages.

The installation of R depends on the operating system to be used. You can find all the necessary information in:

For the development of this course we will use the Windows version but feel free to use whatever version fits your needs. The last version of R is downloadable from here. We will install the latest version available. Remember that you have to install the version that corresponds to the architecture of your OS (32 or 64 bits). In case of doubt install both versions or at least the 32-bit version, which will always work on our computer.

Installation in Windows is very simple. Just run the executable (.exe) file and follow the installation steps (basically say Yes to everything). Once R is installed, we will install RStudio an integrated development environment (IDE) that is more user-friendly than the basic R interface. RStudio provides a more complete environment and some useful tools such as:

We will install the latest version of RStudio. You can see the installation steps in the installation video tutorial.

Being an open source software and with a strong collaborative component R has a large amount of resources and documentation relative to the specific syntax of the language itself (control structures, function creation, calls to objects …) and to every single package available as well.

On the other hand, R counts on a series of manuals which are available right after installing the software. You can find them in the installation directory of R (C:Files-X.X.X). These manuals and many others can also be downloaded from the R project website:

Finally, in addition to the wide repertoire of manuals available, there is also a wide range of resources and online help including:

It is relatively important to become familiar from the beginning with the various alternatives for getting help. A key part of your success in using R lies in your ability to be self-relient and be able to get help and apply it to your own problems.

R is basically a command line environment that allows the user to interact with the system to enter data, perform mathematical calculations or visualize results through plots and maps.

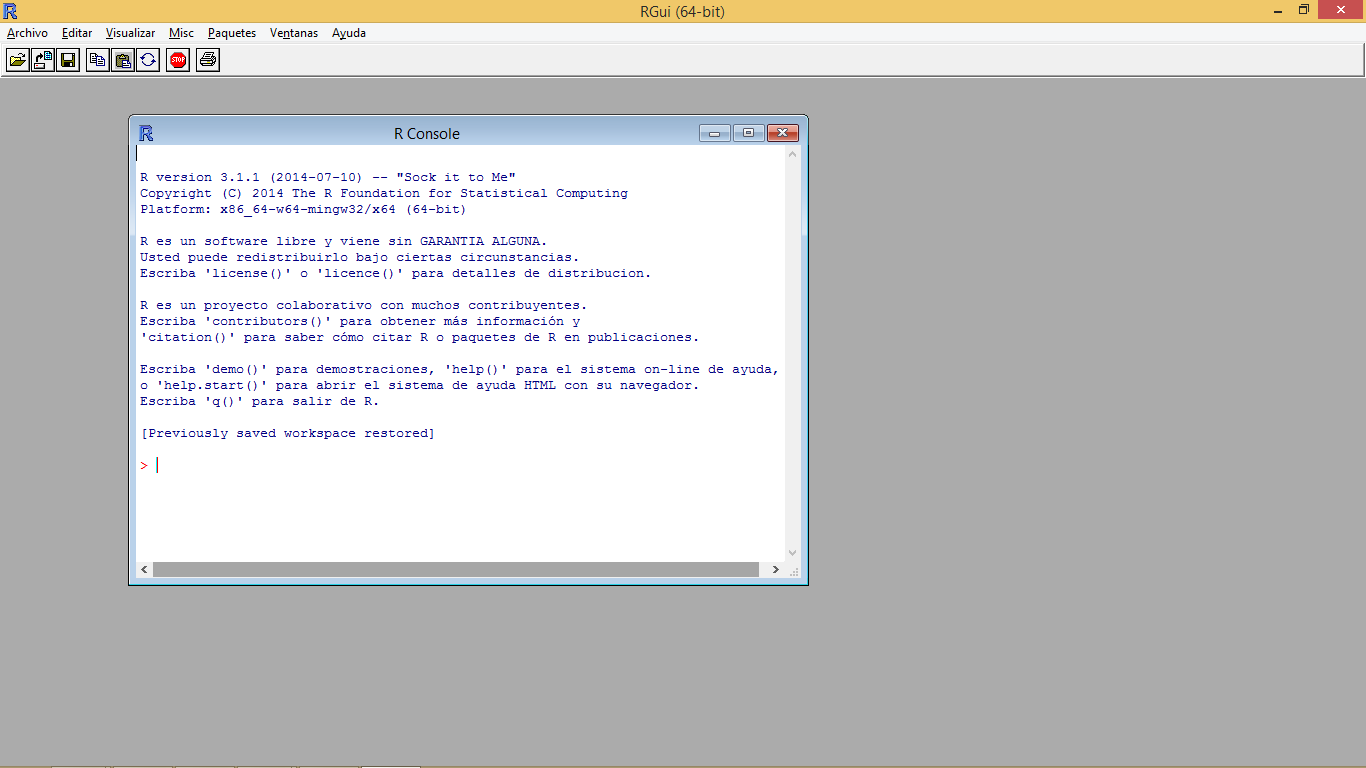

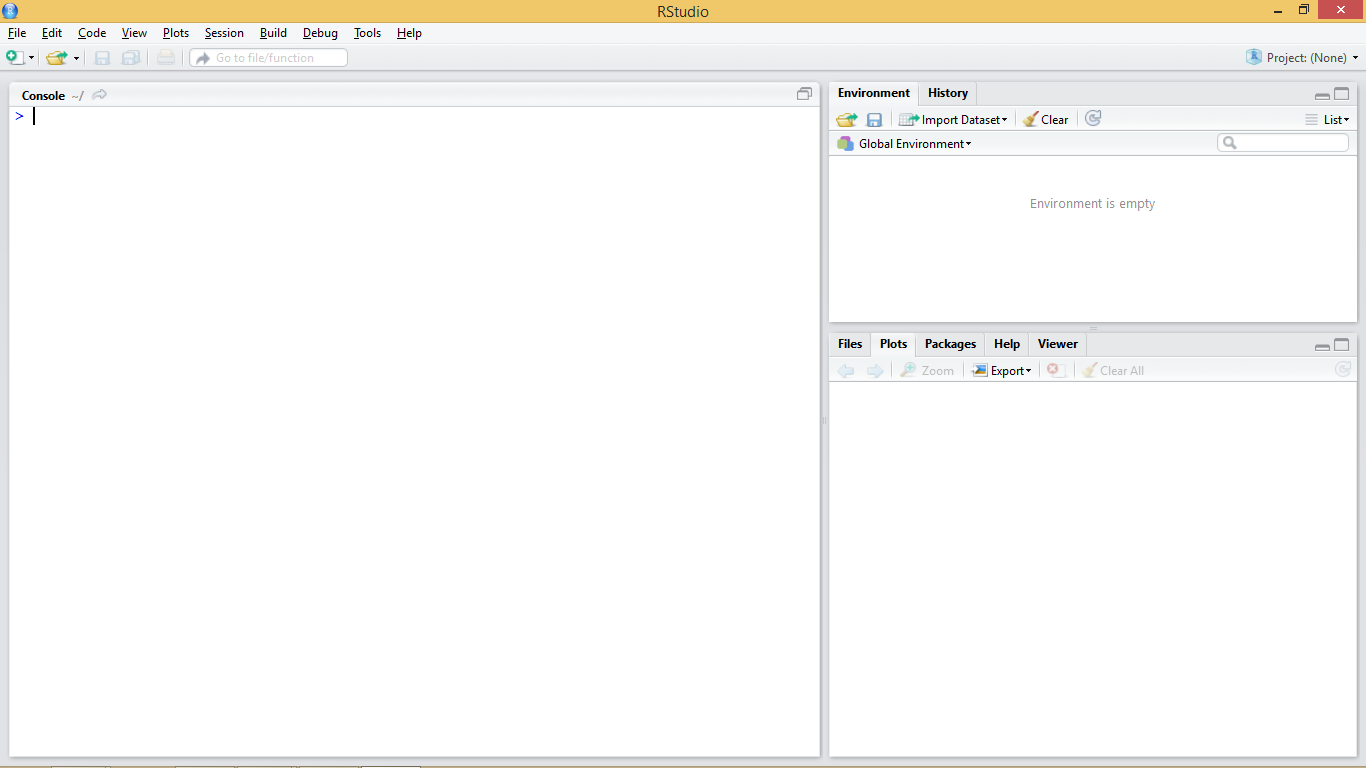

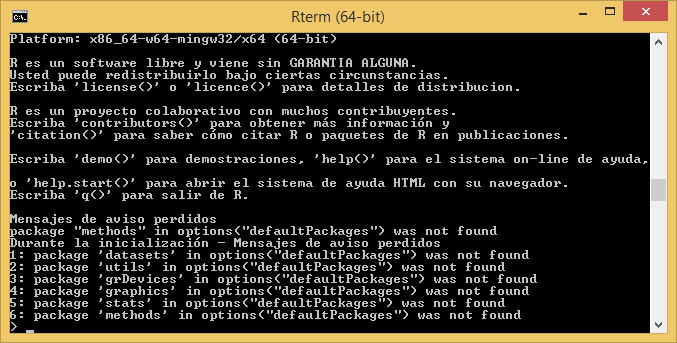

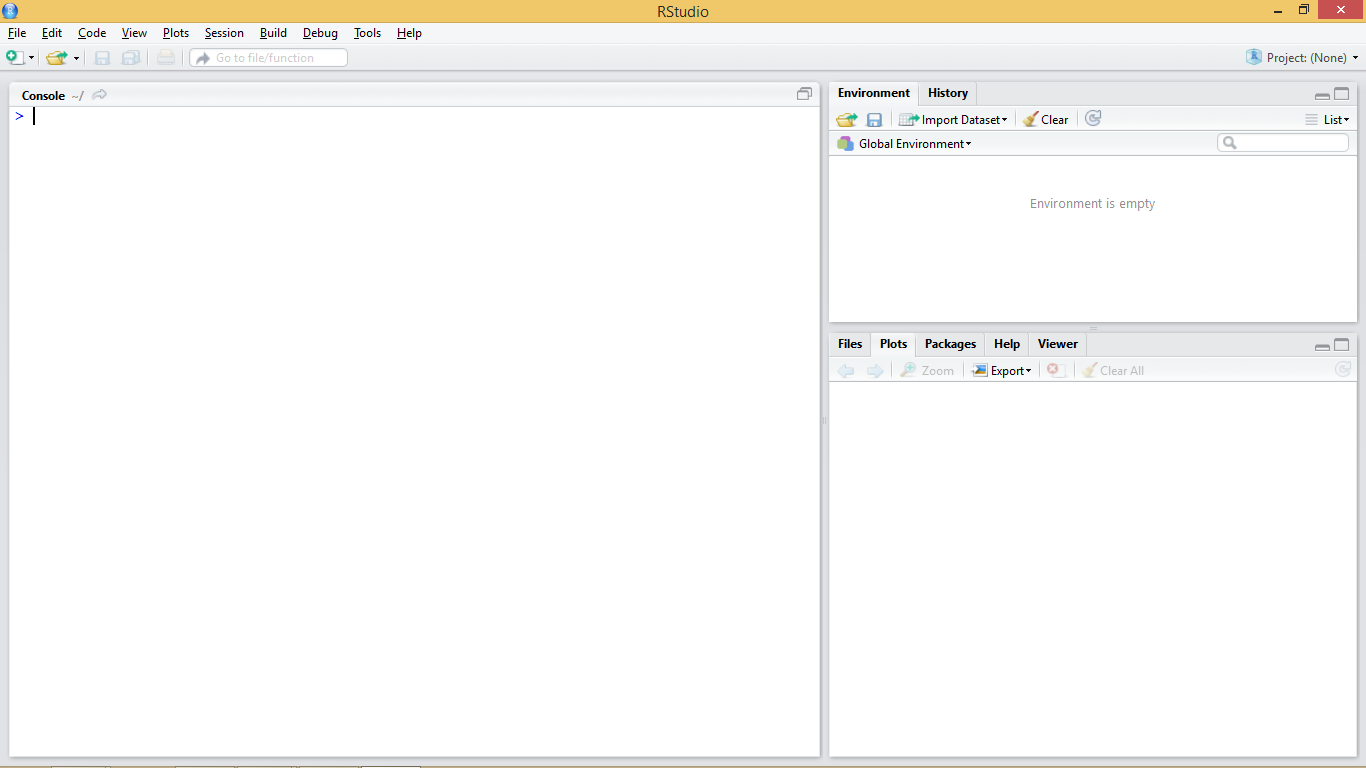

Figure 1.1 shows standard appearance of the R console, which can be considered as a windows cmd like terminal or console. We have also seen what the terminal and working environment looks like in RStudio at the end of the installation process (Figure 1.2). A third possibility is to work directly on the cmd terminal (Figure 1.3). The commands and instructions are the same regardless of the environment that we chose. In this course we will focus on the use of RStudio, since it is the simplest of them all.

The terminal (regardless of the chosen option) is usually the main working window and is where we will introduce the necessary instructions to carry out our operations. It is in this window where we will visualize the results from most instructions and objects we generate. An exception to this are plots and maps which are displayed in a specifically-devoted window located in the bottom-right corner.

Let’s get started and insert our first command in the R’s terminal. When R is ready or awaiting us to input an instruction the terminal shows a cursor right after a > symbol to indicate it.

At this point it is necessary to take into account that R is an interpreted language, which means that the different instructions or functions that we specify are read and executed one by one. The procedure is more or less as follows. We introduce an instruction in the R console, the application interprets and executes it, and finally generates or returns the result.

To better understand this concept we will do a little test using the R console as a calculator. Open the working environment of RStudio if you did not already open it and enter the following statement and press enter:

What just happened is that the R interpreter has read the instruction, in this case a simple arithmetic operation, executed it and returned the result. This is the basic way to proceed to enter operations. However, it will not be necessary for us to always enter the instructions manually. Later we will see how to create scripts or introduce blocks of instructions.

We have previously mentioned some features of R such as that R is an object-oriented language. But, what does this mean? It basically means that to perform any type of task we use objects. Everything in R is an object (functions, variables, results …). Thus, entities that are created and manipulated in R are called objects, including data, functions and other structures.

Objects are stored and characterized by their name and content. Depending on the type of object we create that object will have a given set of characteristics. Generally the first objects one creates are those of variable type, in which we will be able to store a piece of data and information. The main objects of variable type in R are:

Number: an integer or decimal number depending on whether we specify decimal figures.

Factor:a categorical variable or text.

Vector: a list of values of the same type.

Array: a vector of k dimensions.

Matrix: a particular case of array where k=2 (rows, cols).

Data.frame: table composed of vectors.

List: vector with values of different types.

Obviously there are other types of objects in R. For example, another object with which we are going to familiarize ourselves is the model objects. We can create them by storing the output of executing some kind of model like a linear regression model for instance. Spatial data also fits in its particular variety of objects. Through the course we will see both models and spatial information (vector and raster).

Objects in R are created by declaring a variable by specifying its name and then assign it a value using the <- operator. We can also use = but the <- operator is most commonly found in examples and manuals.

So, to create an object and assign it a value the basic instruction is composed of object name <- value.

Try to introduce the following instructions to create different kind of objects:

We can also store in an object the result form any operation:

So here is the thing. The type of object we create depends on the content that we assign. Therefor, if we assign a numeric value, we are creating an object of type number (integer or decimal) and if we assign a text string (any quoted text, either with single or double quotes), we are creating a text type object or string. Once created, the objects are visualized using calls using the name that we have assigned to the object. That is, we will write to the terminal in the name of the object and then its value will be shown.

Some considerations to keep in mind when creating objects or working with R in general lines:

R is case-sensitive so radio ≠ Radio

If a new value is assigned to an object it is overwritten and deletes the previous value.

Textual information (also known as string or char) is entered between quotation marks, either single ('') or double ("").

The function ls() will show us in the terminal the objects created so far.

If the value obtained from an instruction is not assigned in an object it is only displayed in the terminal, it is not stored.

One of the most common objects in R is the vector. A vector can store several values, which must necessarily be of the same type (all numbers, all text, and so forth). There are several ways to create vectors. Try entering the following instructions and viewing the created objects.

We have just covered the basic methods for vector creation. The most common approach is use the function c() which allow as to introduce values manually by separatting them using ,.

Another option that only works for vectors containing integer values is the use of : which produces a ordered sequence of numbers by adding 1 starting from the first value and finishing in the last.

Vectors, lists, arrays, and data frames are indexed objects. This means that they store several values and assign to each of them a numerical index that indicates their position within the object. We can access the information stored in each of the positions by using name[position]:

Note that opposite to most of the other programming languages, the index for the first position in an indexed object is 1, whereas Python, C++ and others use 0.

As with an unindexed object, it is possible to modify the information of a particular position using the combination name[position] and the assignment operator <-. For example:

Let’s see some specific functions and basic operations for vectors and other indexed objects:

length(vector): Returns the number of positions of a vector.

Logical operators <,>, ==,!=: Applying these operators on a vector returns a new vector with values TRUE/FALSE for each of the positions of the vector, depending on whether the given values satisfies or not the condition.

Once we have seen vectors we go to explore how objects of type list work. A list is an object similar to a vector with the difference that lists allow to store values of different type. Lists are created using the list(value1, value2, ...) function. For example:

To access the values stored in the different positions proceed in the same way we did with vectors, ie name[position]:

We can use the length() function with list too:

Arrays are an extension of vectors, which add additional dimensions to store information. The most common case is the 2-dimensional matrix (rows and columns). To create an array, we use array(values, dimensions). Both values and dimensions are specified using vectors. In the following example we see how to create a matrix with 4 rows and 5 columns, thus containing 20 values, in this case correlative numbers from 1 to 20:

[,1] [,2] [,3] [,4] [,5]

[1,] 1 5 9 13 17

[2,] 2 6 10 14 18

[3,] 3 7 11 15 19

[4,] 4 8 12 16 20To access the stored values we will use a combination of row-and-column positions like matrix[row, col], where row indicates the row postition and col the column one. If we only assign value to one of the coordinates ([row,] or [,col]) we get the vector corresponding to the specified row or column.

A data frame is used for storing data tables. It is a list of vectors of equal length. For example, the following variable df is a data frame containing three vectors n, s, b.

As you can see, the function data.frame() is used to create the data.frame. However, we will seldom use these function to create objects or store data. Normally, we will call an instruction to read text files containing data or call data objects available in some packages.

We have seen so far aspects related to the creation of objects. However we should be also know how many objects we have created in our session and how to remove them if necessary. To display all created objects we use ls(). Deleting objects in R is done by the command remove rm(object) and then call to the garbage collector with gc() to free-up the occupied memory.

If we want to removal all the objects we have currently in our working session we can pass a list object containing the names of all objects to the gc() function. If you are thinking on combining gc()and ls()you are right. This would be the way:

Up to this point we have seen and executed some instructions in R, generally oriented to the creation of objects or realization of simple arithmetic operations.

However, we have also executed some function-type statements, such as the length() function. A function can be defined as a group of instructions that takes an input, uses this input to compute other values and returns a result or product. We will not go into very deep details, at least for now. It suffices to know that to execute a function it is enough to invoke the instruction that calls the desired function (length) and to specify the necessary inputs, also known as arguments. These inputs are always included between the parentheses of the instruction (length(vector)). If several arguments are needed we separate them using ,.

Sometimes we can refer to a given argument by using the argument’s name as is the case of the example we saw to delete all the objects in a session rm(list=ls()).

So far we have inserted instructions in the console but this is not the most efficient way to work. We will focus on the use of scripts which are an ordered set of instructions. This means we can write a text file with the instructions we want to insert and then run them at once.

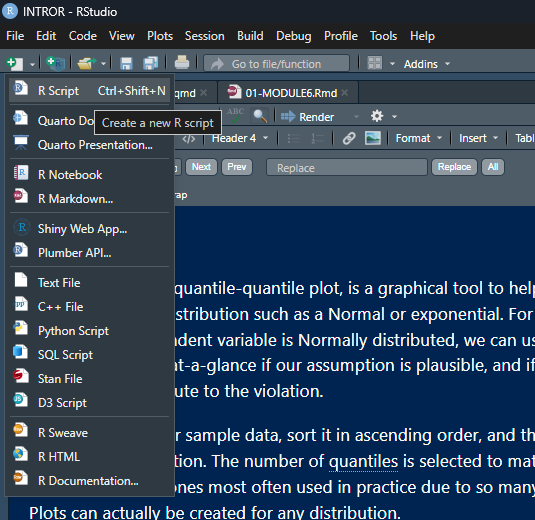

RStudio has an script development environment which opens in the top-left window. We can access the scripting window pressing File/New File/R script.

For additional information visit the RStudio support site.

So far we have seen how to enter data and create objects manually, but it is also possible, and in fact is most common, to read data from files and store it in an object. If the target data file is properly structured, we will create a matrix or a ‘data.frame’ object which we can manipulated afterwards.

Using data files normally requires us to specify the location of that file using paths. To avoid this, R has a tool that allow us to specify a target folder -the so-called working directory or folder- to work with.

The working directory is the default path for reading and writing files of any kind. We can know the path to the current working directory using the getwd() command. To set a new working directory, we use the command setwd("path"). Remember that setwd() requires a string argument (whereas getwd() does not) to specify the path to the working directory (“path”).

By default, in Windows the working directory is set to the Documents folder.

Once the working directory is specified, everything we do in R (read files, export tables and/or graphics …) will be done in that directory. However, it is possible to work with other file system locations, specifying a different path through the arguments of some functions.

R allows you to read any type of file in ASCII format (text files). The most frequently used functions are:

read.table() and its different variations

scan()

read.fwf()

For the development of this course we will focus on using the read.table() function as it is quite versatile and easy to use. Before starting to use a new function we should always take a look at the available documentation. we will take this opportunity to show you how to do this in R so that you begin to become familiar R help.

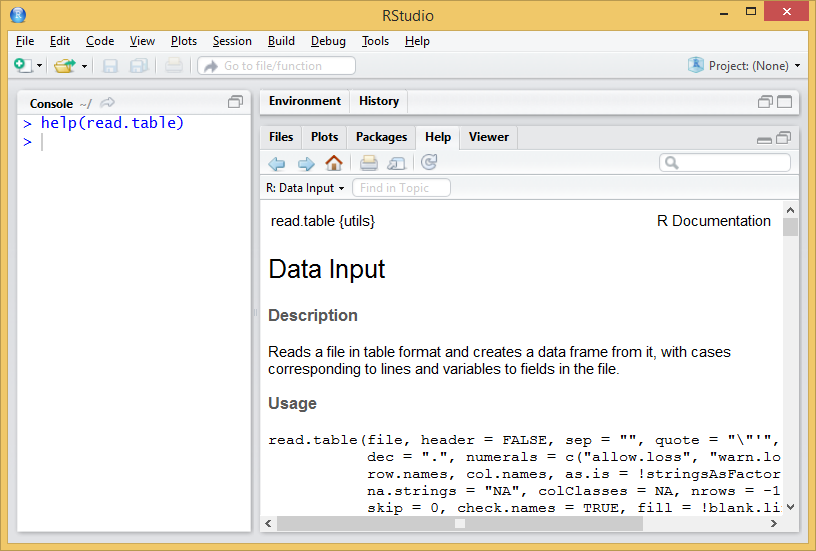

Any available function in R, regardless of being a standard one or belonging to an imported package has a documentation entry in the R help. R help describes us in detail the use of any function, providing information of the different arguments of the function, argument types, defaults, reference to the method (when applies) and even short code examples. To access the help we will use the help() function as follows:

Entering the help() function we access the manual. In this case we can see a brief description of the function read.table() and its different variants (read.csv(), …). Below is the description of the arguments of the function, followed by some examples of application. This is the usual procedure for all functions available in R (Figure 1.5).

In case we use the regular R interface or the cmd terminal, the help entry will open in our default web browser.

At first glance the read.table() function seems to require many arguments, while its variations seem simpler. As already mentioned, arguments are just parameters that we can specify or change to execute a function, thus tunning the operation of the functions and the result to be obtained.

Arguments are specified within the function separated by commas (“,”). However, it is not necessary to assign a value to each one of them, since in the case of omitting an argument it is assigned a default value (always reported in the help entry). In the case of read.table() versus other functions like read.csv() the main advantage of using the first is that we can manipulate any argument, whereas most of them are fixed in the later. read.csv() is desigend to open comma separate files following the north-american standard (, as field delimiter and . as decimal separator). On the other hand, read.table() can potentially open any text file regardless of the separator, encoding, decimal format and so on so forth. Most of the executions of read.table() consist of:

💡read.table(file,header=TRUE, sep=“,”,dec=“.”)

file: path and name of the file to open.

header: TRUE/FALSE argument to determine whether the first row of the data file contains column names.

sep: field or column separator4.

dec: decimal separator.

Let’s see an example of reading file. We will read the file coordinates.txt, located in the Data directory. The file is structured in 3 columns with heading and separated by “;”. Al data are integer so no decimal separator is needed. See Table 2.2.

| FID_1 | X_INDEX | Y_INDEX |

|---|---|---|

| 364011 | 82500 | 4653500 |

| 371655 | 110500 | 4661500 |

| 487720 | 55500 | 4805500 |

| 474504 | 28500 | 4783500 |

| 436415 | 85500 | 4729500 |

| 457549 | 38500 | 4757500 |

| 469377 | 39500 | 4775500 |

| 397162 | 124500 | 4687500 |

| 434666 | 41500 | 4727500 |

| 478383 | 49500 | 4789500 |

| 488973 | 329500 | 4807500 |

| 394153 | 77500 | 4684500 |

| 426962 | 36500 | 4718500 |

| 362216 | 148500 | 4651500 |

The process to follow is:

Set working directory.

Use the function to save the data in an object.

If we do…

…as we have not specified any object in which to store the result of the function, the contents of the file are simply printed on the terminal. This is a usual mistake, don’t worry. To store and later access the contents of the file we will do the following:

FID_1 X_INDEX Y_INDEX

1 364011 82500 4653500

2 371655 110500 4661500

3 487720 55500 4805500

4 474504 28500 4783500

5 436415 85500 4729500

6 457549 38500 4757500

7 469377 39500 4775500

8 397162 124500 4687500

9 434666 41500 4727500

10 478383 49500 4789500

11 488973 329500 4807500

12 394153 77500 4684500

13 426962 36500 4718500

14 362216 148500 4651500Please note that:

You have to specify your own working directory.

The path to the directory is specified in text format, so you type “in quotation marks”.

The name of the file to be read is also specified as text.

The header argument only accepts TRUE or FALSE values.

The sep argument also requires text values to enter the separator5.

It is advisable to save the data an object (table <-).

The result of read.table() is an array stored in an object named table. Since read.table() returns an array we can manipulate our table using the same procedure described in the @ref(arrays) section.

[1] 364011 371655 487720 474504 436415 457549 469377 397162 434666 478383

[11] 488973 394153 426962 362216[1] 364011Below we found some interesting functions to preview and verify the information, and to know the structure of the data we have just imported. These functions are normally used to take a quick look into the first and last rows of an array or data.frame object and also to describe the structure of a given object.

head(): displays the first rows of the array.

tail(): displays the last rows of the array.

str(): displays the structure and data type (factor or number).

FID_1 X_INDEX Y_INDEX

1 364011 82500 4653500

2 371655 110500 4661500

3 487720 55500 4805500

4 474504 28500 4783500

5 436415 85500 4729500

6 457549 38500 4757500 FID_1 X_INDEX Y_INDEX

9 434666 41500 4727500

10 478383 49500 4789500

11 488973 329500 4807500

12 394153 77500 4684500

13 426962 36500 4718500

14 362216 148500 4651500'data.frame': 14 obs. of 3 variables:

$ FID_1 : int 364011 371655 487720 474504 436415 457549 469377 397162 434666 478383 ...

$ X_INDEX: int 82500 110500 55500 28500 85500 38500 39500 124500 41500 49500 ...

$ Y_INDEX: int 4653500 4661500 4805500 4783500 4729500 4757500 4775500 4687500 4727500 4789500 ...As already mentioned, read.table() is adequate to start reading of files to incorporate data into our working session in R. In any case we must be aware that there are other possibilities such as the read.csv() and read.csv2() that we have already seen when accessing the read.table() description. These functions are variations that defaults some arguments such as the field separator (columns) or the decimal character. In the help of the function you have information about it.

Of course, we can also write text files from our data. The procedure is quite similar to read data but using write.table() instead of read.table. Remember that the created files are saved into the working directory, unless you specify an alternative path in the function arguments. As always, before starting the first thing is to consult the help of the function.

The arguments of the function are similar to those already seen in read.table ():

💡write.table(object,file,names,sep)

object: object of type matrix (or dataframe) to write.

file: name and path to the created file (in text format).

row.names: add or not (TRUE or FALSE) queue names. FALSE is recommended.

sep: column separator (in text format).

dec: decimal separator (in text format).

Try the following instructions6 and observe the different results:

Let’s see the most common instructions for manipulating and extracting information in R. Specifically we will see how to extract subsets of data from objects of type vector, array or dataframe. We will also see how to create new data sets from the aggregation of several objects. There are many commands that allow us to manipulate our data in R. Many things can be understood as manipulation but for the moment we will focus on:

Select or extract information

Sort tables

Add rows or columns to a table

As you might already guess, we will work with tabular data like arrays and data.frames which we further refer to as tables.

The first thing we will do is access the information stored in the column(s) of a given table object. There are two basic ways to do this:

Using the position index of the column.

Using the name (header) of the column.

These two basic forms are not always interchangeable, so we will use one or the other depending on the case. It is recommended that you use the one that feels most comfortable for you. However, in most examples the column position index is used since it is a numerical value that is very easily integrated with loops and other iterative processes.

To extract columns using the position index we will use a series of instructions similar to those already seen in extracting information from Arrays, Vectors and Lists. The following statement returns the information of the second column of the array object table and stores it in a new object that we called col2:

[1] 82500 110500 55500 28500 85500 38500 39500 124500 41500 49500

[11] 329500 77500 36500 148500It is also possible to extract a range of columns, proceeding in a similar way to what has already been seen in the creation of vectors. The following statement extracts columns 2 and 3 from the table object and stores them in a new object called cols:

X_INDEX Y_INDEX

1 82500 4653500

2 110500 4661500

3 55500 4805500

4 28500 4783500

5 85500 4729500

6 38500 4757500

7 39500 4775500

8 124500 4687500

9 41500 4727500

10 49500 4789500

11 329500 4807500

12 77500 4684500

13 36500 4718500

14 148500 4651500Now let’s see how to select columns using their name. Name extraction is performed using a combination of object and column name object using $ to separte object from column name. The following statement selects the column named Y_INDEX from the array object table and stores it in col.Y_INDEX:

[1] 4653500 4661500 4805500 4783500 4729500 4757500 4775500 4687500 4727500

[10] 4789500 4807500 4684500 4718500 4651500A key piece of information here is the name of the column which we need to know in advance. Well, we can check the original text file or inspect the object table using str(). We can also take look to the top-right window activaing the Environment sub-window and unwrap table but be aware this can be only accessed using RStudio.

The main difference between these two methods is that index selection makes it possible to extract column ranges easily. To do this using the name of the columns you have to use functions like subset():

Using the argument select we can point the columns that we want to extract using a vector with column names. Using subset() it is also possible to specify the columns that we do NOT want to extract. To do this proceed as follows:

In this way we would only extract the first column, excluding X_INDEX and Y_INDEX. Of cours, we can do this using the column index as well:

The main reason why we are learning how to manipulate table columns is to be able to prepare our data for other purposes. It may be the case we need to join tables or columns that proceed from the same original table. The instruction cbind() allow us to merge together several tables and/or vectors provided they have the same number of rows. We can merge as many objects as we want to, by separating them using ,:

col2 cols2

[1,] 82500 364011

[2,] 110500 371655

[3,] 55500 487720

[4,] 28500 474504

[5,] 85500 436415

[6,] 38500 457549

[7,] 39500 469377

[8,] 124500 397162

[9,] 41500 434666

[10,] 49500 478383

[11,] 329500 488973

[12,] 77500 394153

[13,] 36500 426962

[14,] 148500 362216It is often the case we need to alter or change the name of a table object. If we wanted to rename all the columns of an object we would to pass a vector with names to the function colnames() in case we are renaming an array or names() if we are dealing with a data.frame. Note that the vector should have the same length as the total number of columns. Lets rename our table cols3:

What if we want to change only a given name. Then we just point to the column header using the position index like this:

You may be wondering How can we know what kind of object is my table?. That is a very good question. Specially at the begining is quite difficult to be in control this stuff. If you use RStudio you already see a description of the objects in the top-right window. array or matrix objects show something like [1:14,1:2] indicating multiple dimensions, vectors are similar but with only 1 dimension [1:14] and data.frames show the word data.frame in their description. However, this is not the fancy way to deal with object types. Just for the record, when we mean type an actual code developer means class. Of course there is a function called class() that returns the class an object belongs to:

Finally, let’s look at how to sort columns and arrays. To sort a column in R, the sort() function is used. The general function of the function is:

[1] 28500 36500 38500 39500 41500 49500 55500 77500 82500 85500

[11] 110500 124500 148500 329500If we want to reorder an array based on the values of one of its columns, we will use the order () function. The general operation of the function is:

FID_1 X_INDEX Y_INDEX

4 474504 28500 4783500

13 426962 36500 4718500

6 457549 38500 4757500

7 469377 39500 4775500

9 434666 41500 4727500

10 478383 49500 4789500

3 487720 55500 4805500

12 394153 77500 4684500

1 364011 82500 4653500

5 436415 85500 4729500

2 371655 110500 4661500

8 397162 124500 4687500

14 362216 148500 4651500

11 488973 329500 4807500To be honest, in this last example we are actually working with rows. Take a look at the position of the ,. The brackets are also something that we will use later to extract data from a table. But it feels right to bring here the order() command right after sort().

Let us now turn to the manipulation of rows. The procedure is basically the same as in the case of columns, except for the fact that we normally do not work with names assigned to rows (although that’s a possibility), but we refer to a row using its position. To extract rows or combine several objects according to their rows we use the following expressions:

Same as with Arrays we point to rows instead of columns when we use the index value to the left of the [row,col]. So that’s the thing, we just change that and we are dealing with rows. We can join rows and tables using the rbind() function rather than cbind(). r stands for row and c for column.

We can extract a subsample of rows that meet a given criteria:

💡table[criteria,]

FID_1 X_INDEX Y_INDEX

1 364011 82500 4653500 FID_1 X_INDEX Y_INDEX

2 371655 110500 4661500

5 436415 85500 4729500

8 397162 124500 4687500

11 488973 329500 4807500

14 362216 148500 4651500Oh, we expect you to have found out this by yourself but evidently we can combine row and column manipulation if that fits our purpose.

There are a large number of functions in the basic installation of R. It would be practically impossible to see all of them so we will see some of the most used, although we must remember that not only is there the possibility of using predesigned functions, but R also offers the possibility to create your own functions.

Below are some of the basic statistical functions that we can find in R. These functions are generally applied matrix-array or data.frame data objects. Some of them can be applied to the whole table and others to single columns or rows.

sum() Add values.

max() Returns the maximum value.

min() Returns the minimum value.

mean() Calculates the mean.

median() Returns the median.

sd() Calculates the standard deviation.

summary() Returns a statistical summary of the columns.

We are going to apply them using some example data. We will use the data stored in the file fires.csv, inside the Data folder (Table 3.1). This file contains data on the annual number of fires between 1985 and 2009 in several European countries.

fires <- read.csv2("./data/Module_1/fires.csv", header = TRUE)

knitr::kable(coords, caption = "Structure of the fires.csv file.")| FID_1 | X_INDEX | Y_INDEX |

|---|---|---|

| 364011 | 82500 | 4653500 |

| 371655 | 110500 | 4661500 |

| 487720 | 55500 | 4805500 |

| 474504 | 28500 | 4783500 |

| 436415 | 85500 | 4729500 |

| 457549 | 38500 | 4757500 |

| 469377 | 39500 | 4775500 |

| 397162 | 124500 | 4687500 |

| 434666 | 41500 | 4727500 |

| 478383 | 49500 | 4789500 |

| 488973 | 329500 | 4807500 |

| 394153 | 77500 | 4684500 |

| 426962 | 36500 | 4718500 |

| 362216 | 148500 | 4651500 |

The first thing is to import the file into atable.

Once data is imported we can take a look at the structure to make sure that everything went well. We should have integer values for each region:

'data.frame': 25 obs. of 7 variables:

$ YEAR : int 1985 1986 1987 1988 1989 1990 1991 1992 1993 1994 ...

$ PORTUGAL: int 8441 5036 7705 6131 21896 10745 14327 14954 16101 19983 ...

$ SPAIN : int 12238 7570 8679 9247 20811 12913 13531 15955 14254 19263 ...

$ FRANCE : int 6249 4353 3043 2837 6763 5881 3888 4002 4769 4618 ...

$ ITALY : int 18664 9398 11972 13588 9669 14477 11965 14641 14412 11588 ...

$ GREECE : int 1442 1082 1266 1898 1284 1322 858 2582 2406 1763 ...

$ EUMED : int 47034 27439 32665 33701 60423 45338 44569 52134 51942 57215 ...Now we will calculate some descriptive statistics for each column to have a first approximation to the distribution of our data. To do this we will use the function summary() that will return some basic statistical values such as:

Quantile

Mean

Median

Maximum

Minimum

YEAR PORTUGAL SPAIN FRANCE ITALY

Min. :1985 Min. : 5036 Min. : 7570 Min. :2781 Min. : 4601

1st Qu.:1991 1st Qu.:14327 1st Qu.:12913 1st Qu.:4002 1st Qu.: 7134

Median :1997 Median :21870 Median :16771 Median :4618 Median : 9540

Mean :1997 Mean :20848 Mean :16937 Mean :4907 Mean : 9901

3rd Qu.:2003 3rd Qu.:26488 3rd Qu.:20811 3rd Qu.:6249 3rd Qu.:11965

Max. :2009 Max. :35697 Max. :25827 Max. :8005 Max. :18664

GREECE EUMED

Min. : 858 Min. :27439

1st Qu.:1322 1st Qu.:45623

Median :1486 Median :55215

Mean :1656 Mean :54249

3rd Qu.:1898 3rd Qu.:62399

Max. :2582 Max. :75382 The summary() function is not only used to obtain summaries of data through descriptive statistics, but can also be used in model type objects to obtain a statistical summary of the results, coefficients, significance … Later we will see an example of this applied on a linear regression model.

Let’s now see what happens if we apply some of the functions presented above. Try running the following instructions:

As can be seen these 3 instructions work with a tablebut mean(), median() and sd() will not. We have to apply them to a single column:

So far we have seen how to apply some of the basic statistical functions to our data, applying those functions to the data contained in the matrix or some of its columns. In the case of columns we have manually specified which one to apply a function. However, we can apply functions to all elements of an array (columns or rows) using iteration functions.

The apply() function allows you to apply a function to all elements of a table. We can apply some of the functions before the rows or columns of an array. There are different variants of this function. First, as always, invoke the help of the function to make sure what we are doing.

According to the specified in the function help we can see that the apply () function works as follows:

💡apply(x, margin, fun, …)

Where:

X: data matrix.

MARGIN: argument to specify whether the function is applied to rows (1) or columns (2).

FUN: function to be applied (mean,sum…)

For example, if we want to sum the values of each country/region we will do as follows:

YEAR PORTUGAL SPAIN FRANCE ITALY GREECE EUMED

1997.00 20848.20 16937.24 4907.16 9900.64 1655.80 54249.04 Well, almost there. We have included the YEAR column which is the first one:

PORTUGAL SPAIN FRANCE ITALY GREECE EUMED

20848.20 16937.24 4907.16 9900.64 1655.80 54249.04 📝EXERCISE 1: Calculate the total number of fires on a yearly basis.

Deliverable:

Submit the commented code following the exercises portfolio template example and write a text file (.txt) with the result.

📝EXERCISE 2: Open the file “barea.csv” (file containing Burned Area by year and country) and save it in an object named “barea” - Select and store in a new matrix the data from the year 2000 to 2009. - Calculate descriptive statistics 2000-2009. - Calculate the mean, standard deviation, minimum and maximum of all columns and save it to a new object.

Deliverable:

Submit the commented code following the exercises portfolio template example and write a text file (.txt) with the result.

🪄EXERCISE 2 HINT:

Use the apply function to calculate each statistic, separately.

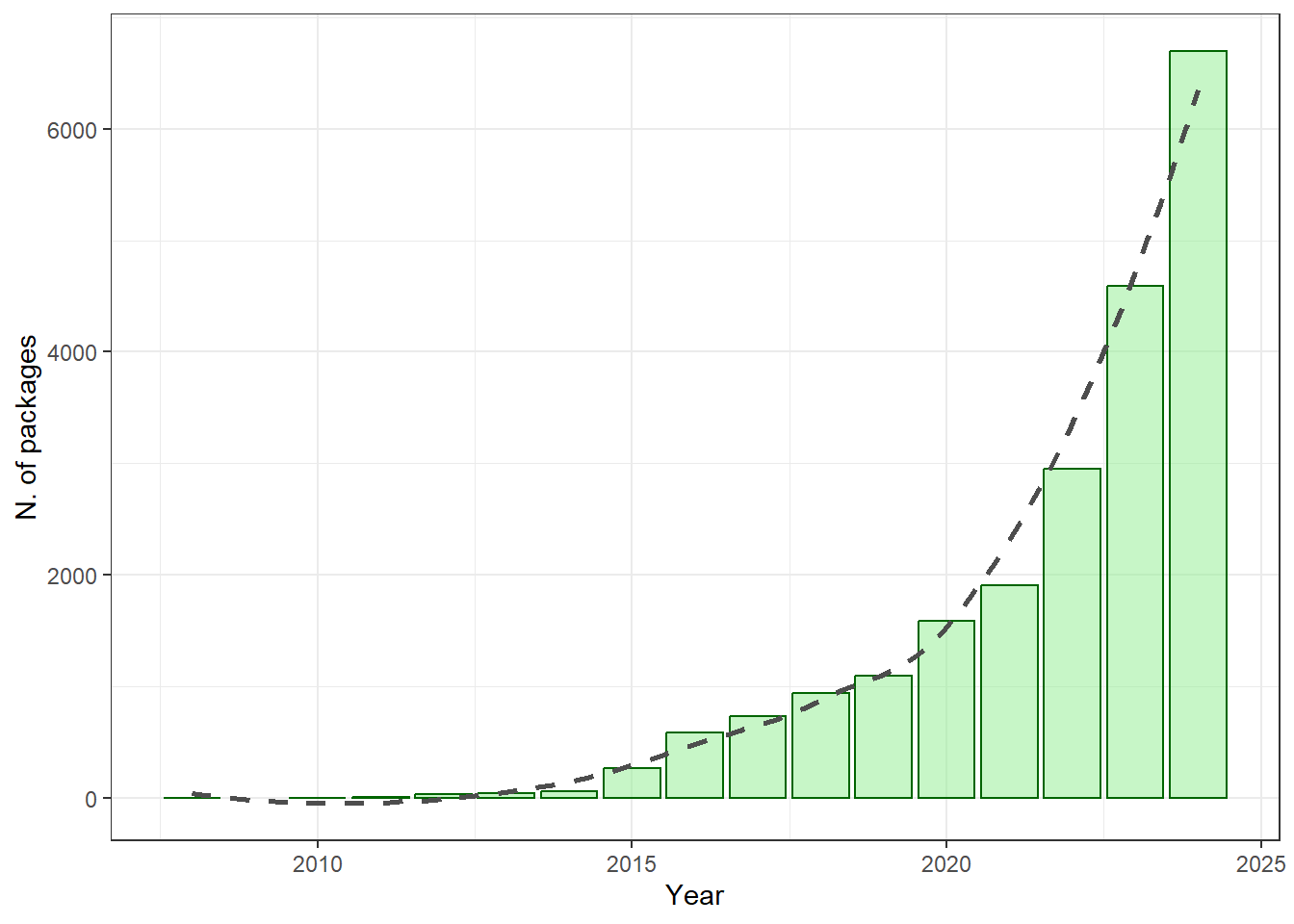

We have already seen how some of the basic functions of R. work. However, we have the possibility of extending the functionalities of R by importing new packages into our environment. These packages are developed by different research groups and/or individuals.

There are currently over 20015 packages available in the R project repository (CRAN). Obviously we do not need to know how each one of them works, but only focus on those that fit our needs.

The import and installation of new packages is carried out in 2 stages:

obtaining and installing and internal call to the package.

Loading the package into our session.

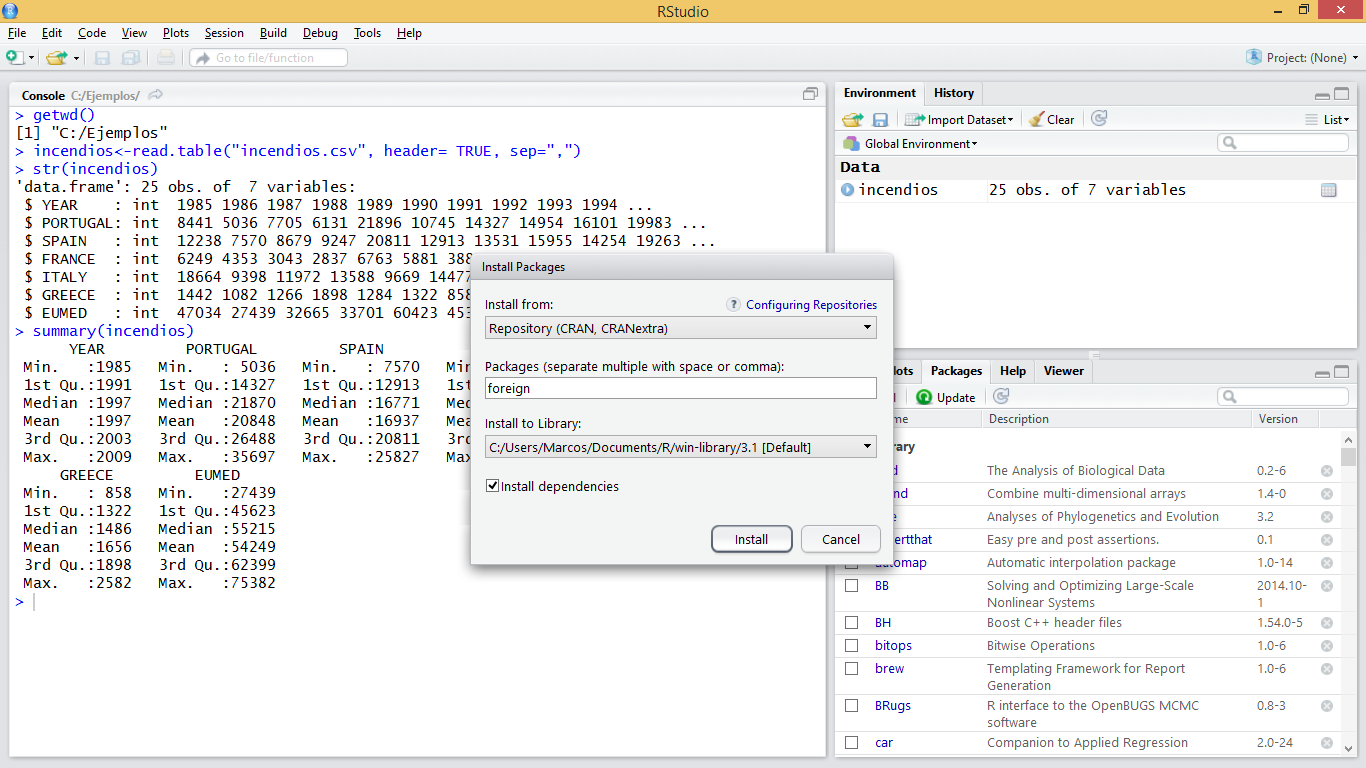

We will install the “foreign” package. This package provides functions for reading and writing data stored in different statistical software formats such as Minitab, S, SAS, SPSS, Stata, Systat … and to read and write dBase files such as attribute tables of vector layers in format shapefile. The first thing we will do is get the package via download. This can be done from the menu of R:

Packages / Install packages …

Select download directory (mirror)

Search for “foreing”

If you are using RStudio simply go to the Packages tab in the lower right box and select the Install option. In the popup window select the Repository (CRAN, CRANextra) option in the drop-down menu and type the name of the package.

Another option is to obtain the package in .zip format directly from the webpage of project R and use the function Install package (s) from local zip files … (or Package archives in RStudio).

It is also possible to install packages through instructions in the R window, which is the most recommended method7:

At this point we would have installed the package in our personal library of R. However, in order to use the functions of the new package in our R environment it is necessary to make an internal call to the package. This is generally done using thelibrary()function8:

Once this is done we have all the functions of the package ready to be used. All that is left is to learn how to use the functions of the package… which is easy to say but maybe not to do.

So far we have mainly seen how to use pre-designed functions either in the default installation or from other packages. However, there is the possibility of creating our own functions.

A function is a group of instructions that takes an input or input data, and uses them to calculate other values, returning a result or product. For example, the mean() function takes as input a vector and returns as a result a numeric value that corresponds to the arithmetic mean.

To create our own functions we will use the object called function that constitute new functions. The usual syntax is:

💡FunName <- function(args){comands}

Where:

arguments are the arguments we want to pass to our function.

commands are the instructions needed to do whatever the function does.

Let’s look at a simple example. We will create a function to calculate the standard deviation of a vector with numerical data. The standard deviation formula looks like this:

Which is essentially the square root (sqrt()) of the variance (var()):

The function name is desv(). This function requires a single argument (x) to be executed. Once the function is defined, it can be called and used as any other predefined function in the system.

Let’s see an example with 2 arguments. We will create a function to calculate the NDVI. The function will take as arguments two objects of vector type corresponding to the sensor Landsat TM channels 3 and 4:

The normalized difference vegetation index (NDVI) is a simple graphical indicator that can be used to analyze remote sensing measurements, typically but not necessarily from a space platform, and assess whether the target being observed contains live green vegetation or not wikipedia.org.

Where

As we have said, once created we can use our functions in the same way as the rest of functions. This includes using the apply() function and its derived versions to iterate over rows and columns of an array or data frame.

Let’s see an example with the function desv(), previously created applied to the data of number of fires:

R is not just an environment for the implementation and use of functions for statistical calculation but it is also a powerful environment for generating and displaying plots. Creating plots is besides an effective and quick way to visualize our data. By doing so we can verify whether data was correctly imported or not. However, creating graphics is also done by command-line instructions, which can sometimes be a bit tricky, especially at the beginning.

In R we can create many types of plots. With some packages it is also possible to generate maps similar to those created by GIS, although for the moment we will only see some basic types such as:

Dot charts

Line charts

Barplots

Histograms

Scatterplots

Before going into detail with the specific types of graphics we will see some general concepts which apply to the majority of plots:

All graphics always require an object that contains the data to be drawn. This object is usually specified in the first argument of the function corresponding to each type of chart.

There are a number of arguments to manipulate axis labels or the chart title:

main: text with the title of our plot.

xlab: text for x axis label.

ylab: text for y axis label.

xlim: vector with upper and low range for the x-axis.

ylim: vector with upper and low range for the y-axis.

cex: number indicating the aspect ratio between plot elements and text. 1 by default.

col: changes de color the plotted element. See http://www.stat.columbia.edu/~tzheng/files/Rcolor.pdf

legend(): adds a legend element describing symbology.

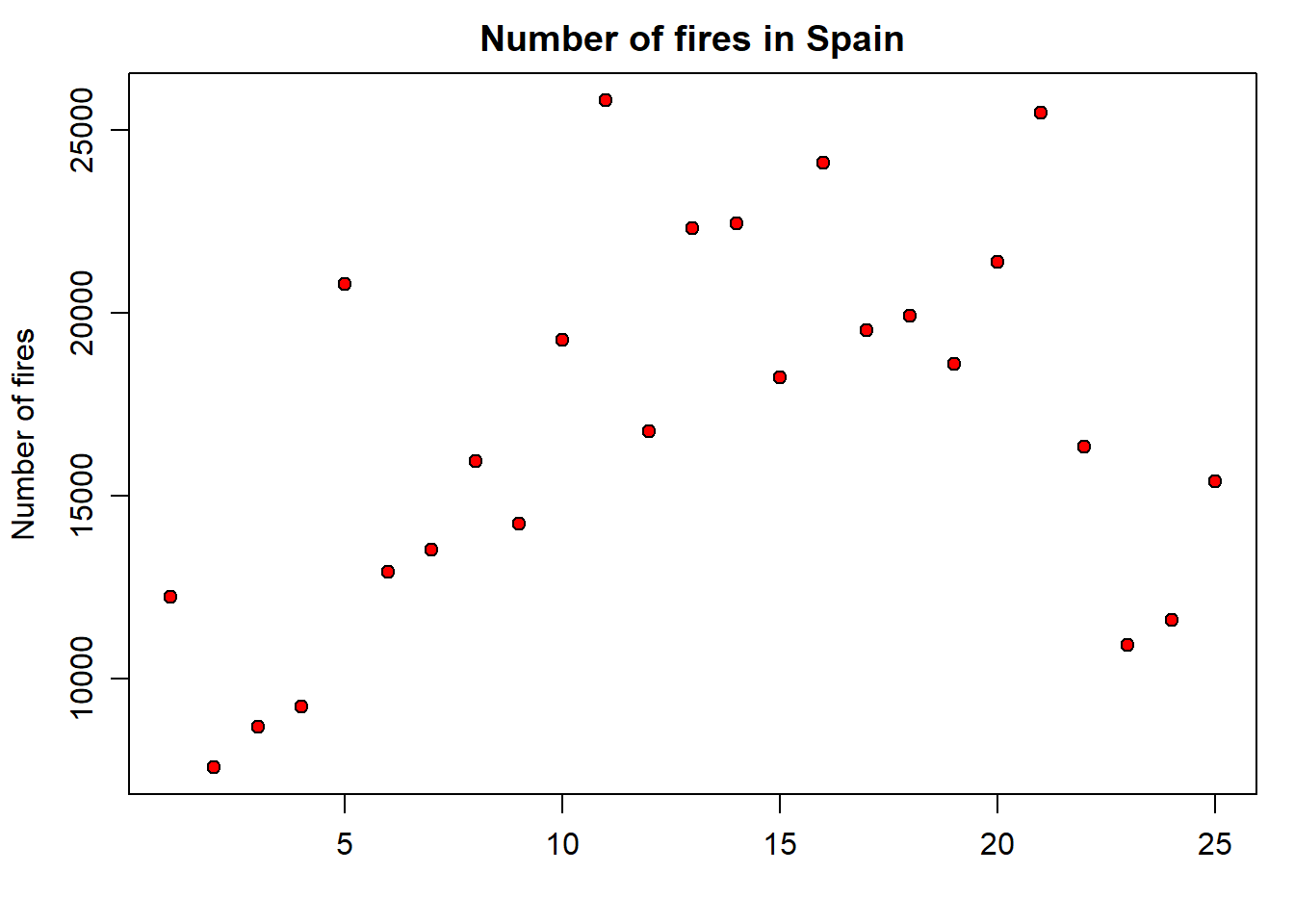

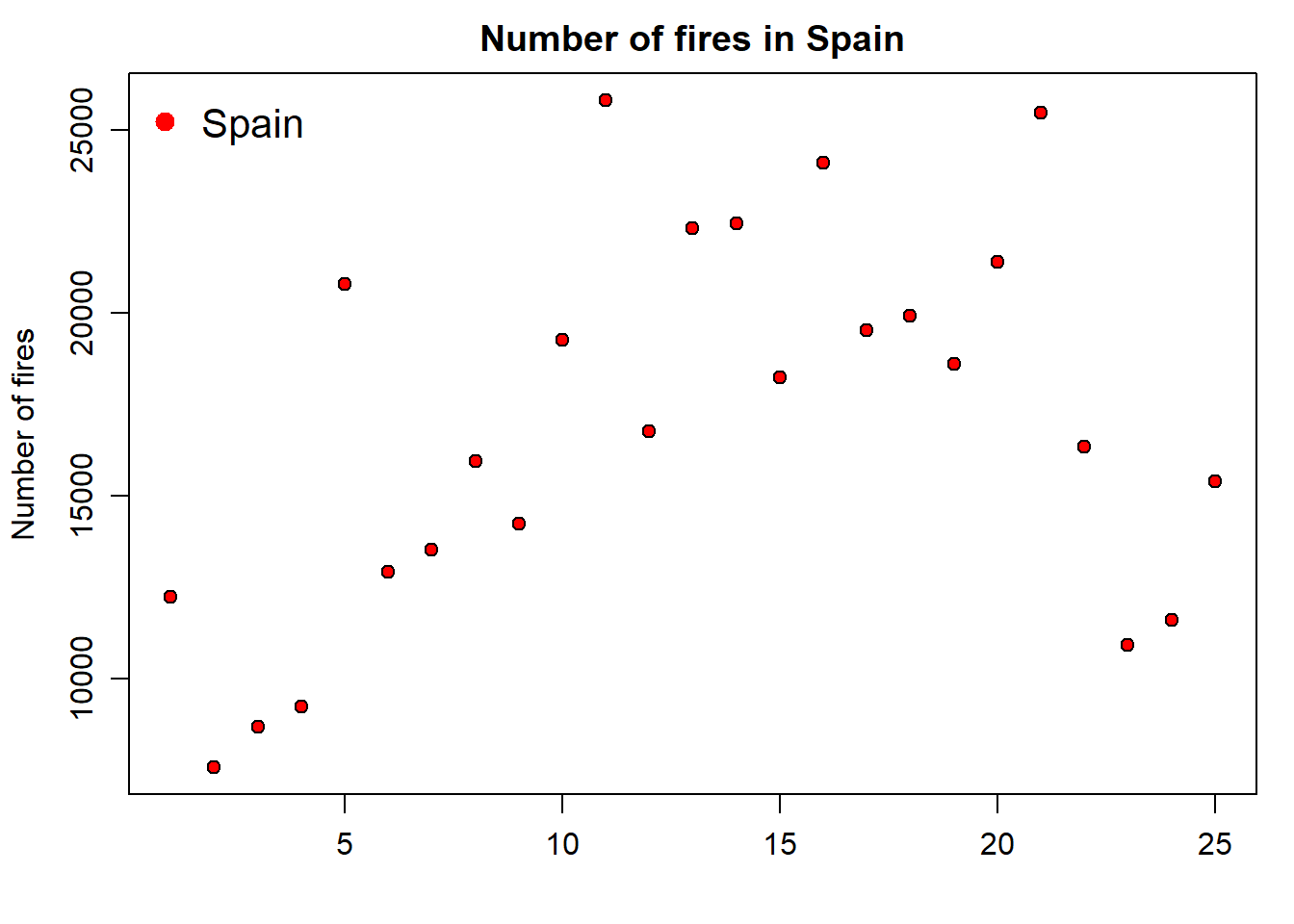

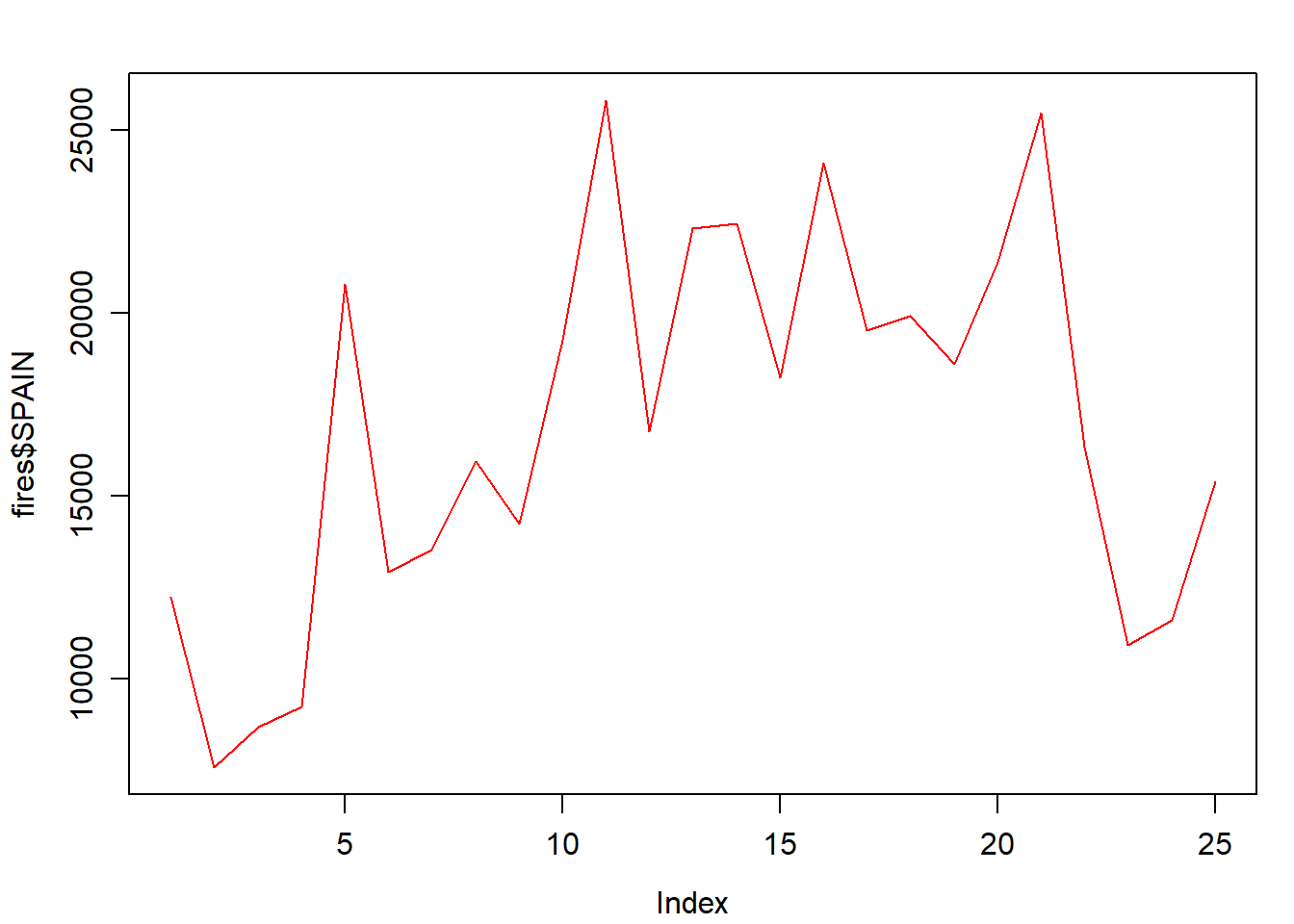

This is one of the most basic types of graphics if not the most basic one we can create. To create such a graph we will use the plot() function. Let’s look at a simple example using data from fires.csv9:

As you can see, we are plotting data from the SPAIN column, ie, yearly fire occurrence data in Spain.

Remember RStudio displays plots in the right-bottom window. In addition, if you need to take closer look use the Zoom button to pop-up a new plot window.

Le’s tune up and enhance our plot. We can change the symbol type using the pch argument. You’ll find a list of symbol types (not just dot charts) at http://www.statmethods.net/advgraphs/parameters.html.

We can change symbol size using cex:

And we can change the color of the symbol with col:

The col argument can be specified either using the color name as in the example or using its code number, hexadecimal or RGB so that col = 1, col = "white", and col = "#FFFFFF" are equivalent. In some types of symbols we can also change the color of the symbol background in addition to the symbol itself using the argument bg:

The finish our plot we will modify axis labels, add a title and a legend:

par(mar=c(3.5, 3.5, 2, 1), mgp=c(2.4, 0.8, 0))

plot(fires$SPAIN,pch=21,cex=1,col='black',bg='red',

main='Number of fires in Spain',ylab = 'Number of fires',xlab = '')

Note that we have used the xlab argument to leave the x-axis label blank. Now we add the legend. It is important you bear in mind that the legend is added with an additional command right after the plot statement. Legends in basic plots are just an image we add to an existing plot by emulating the symbol used in that plot using the legend() function:

par(mar=c(3.5, 3.5, 2, 1), mgp=c(2.4, 0.8, 0))

plot(fires$SPAIN,pch=21,cex=1,col='black',bg='red',

main='Number of fires in Spain',ylab = 'Number of fires',xlab = '')

legend( "topleft" , cex = 1.3, bty = "n", legend = c("Spain"), , text.col = c("black"), col = c("red") , pt.bg = c("red") , pch = c(21) )

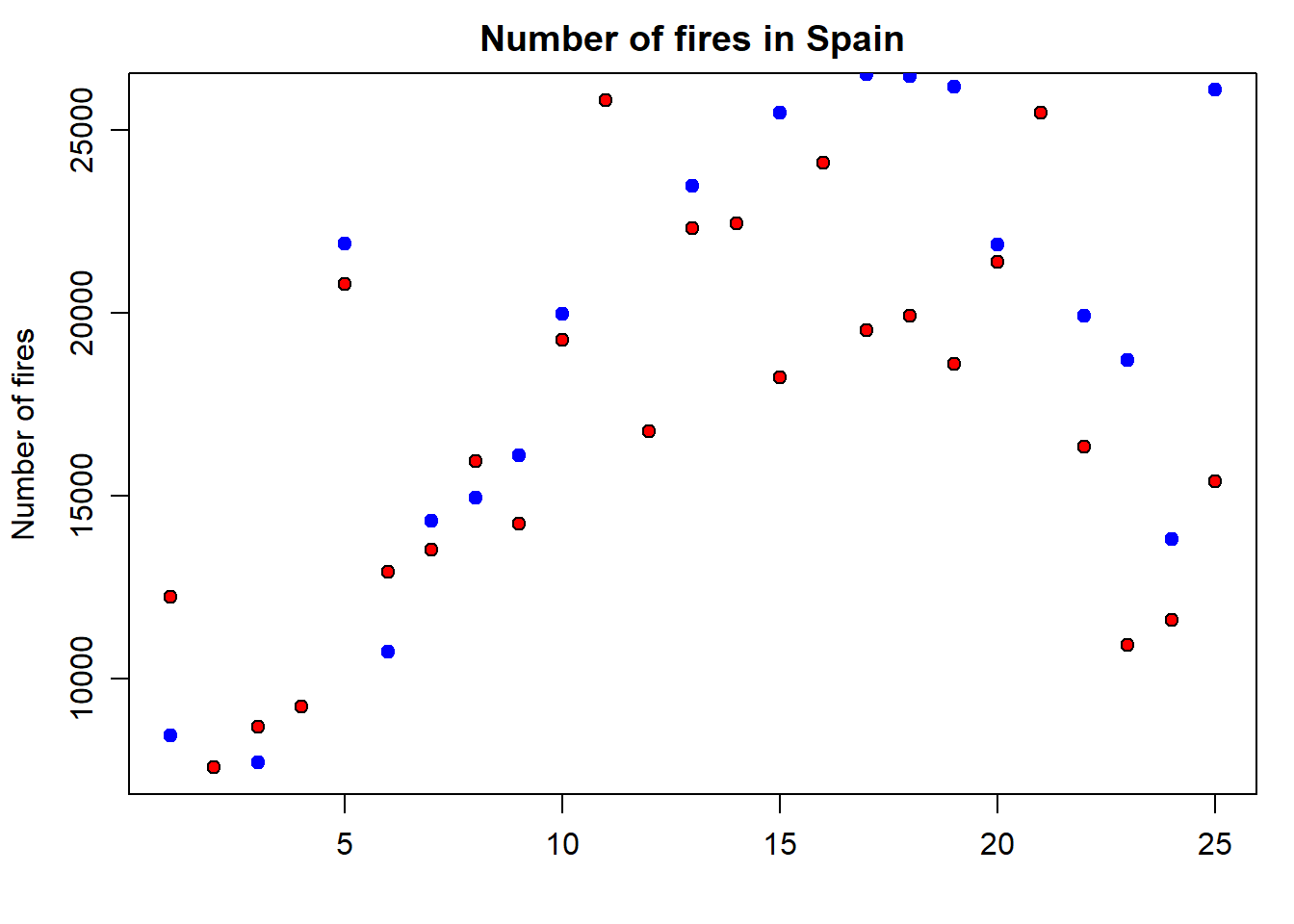

But, what if we want to add a second series of data? Then we proceed in a way similar to the legend() statement, but using the functionpoints() similar to what we have seen in the plot() example. In the following code we add a second point data series with portugal:

par(mar = c(3.5, 3.5, 2, 1), mgp = c(2.4, 0.8, 0))

plot(

fires$SPAIN,

pch = 21,

cex = 1,

col = 'black',

bg = 'red',

main = 'Number of fires in Spain',

ylab = 'Number of fires',

xlab = ''

)

points(

fires$PORTUGAL,

pch = 21,

cex = 1,

col = "blue",

bg = "blue"

)

Finally, we update the legend to fit the new plot with the second series of data. To do that we simply include a second value on each argument using a vector:

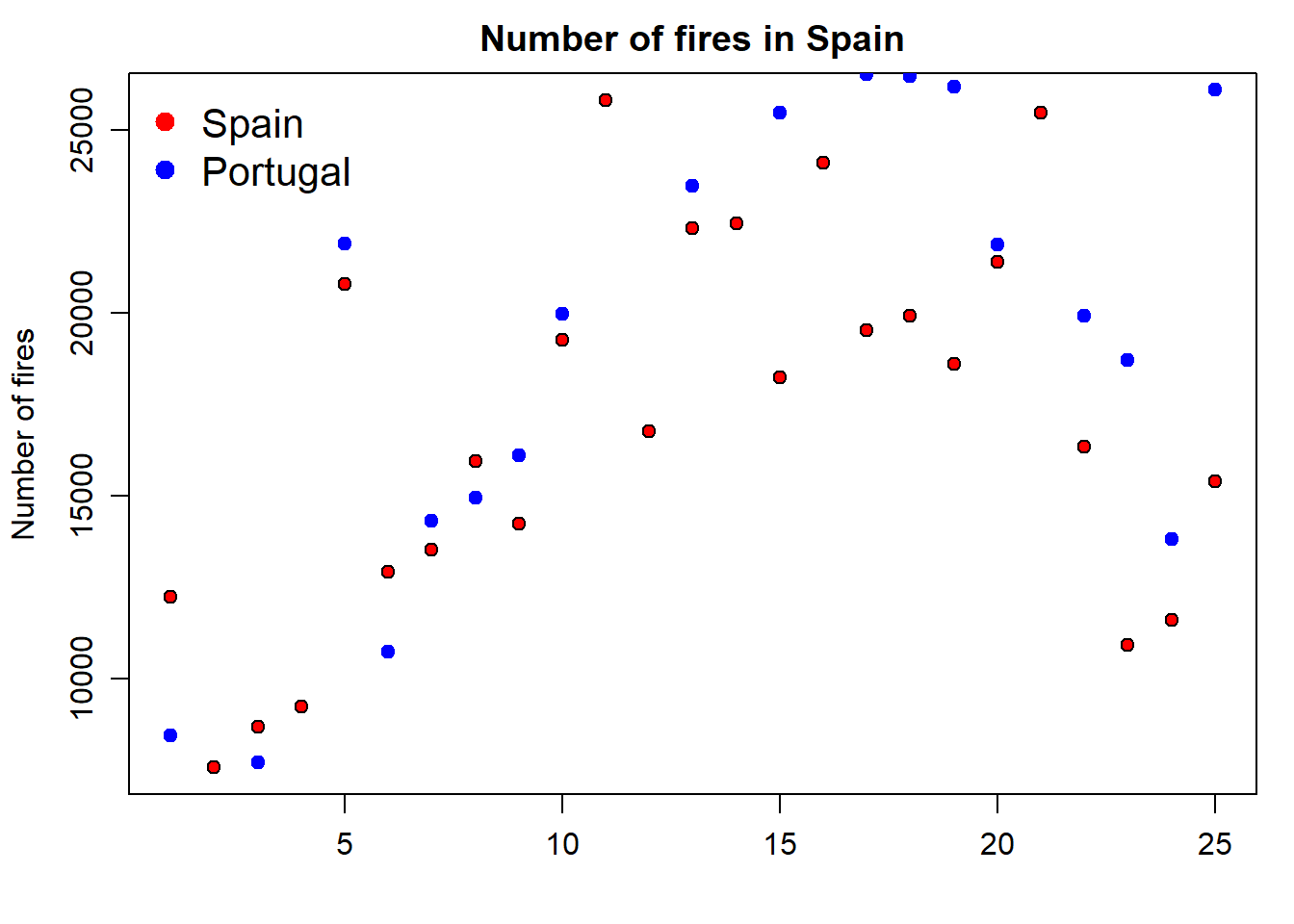

par(mar=c(3.5, 3.5, 2, 1), mgp=c(2.4, 0.8, 0))

plot(fires$SPAIN,pch=21,cex=1,col='black',bg='red',

main='Number of fires in Spain',ylab = 'Number of fires',xlab = '')

points(fires$PORTUGAL,pch=21,cex=1,col="blue",bg="blue")

legend( "topleft" , cex = 1.3, bty = "n", legend = c("Spain","Portugal"), , text.col = c("black"), col = c("red","blue") , pt.bg = c("red","blue") , pch = c(21) )

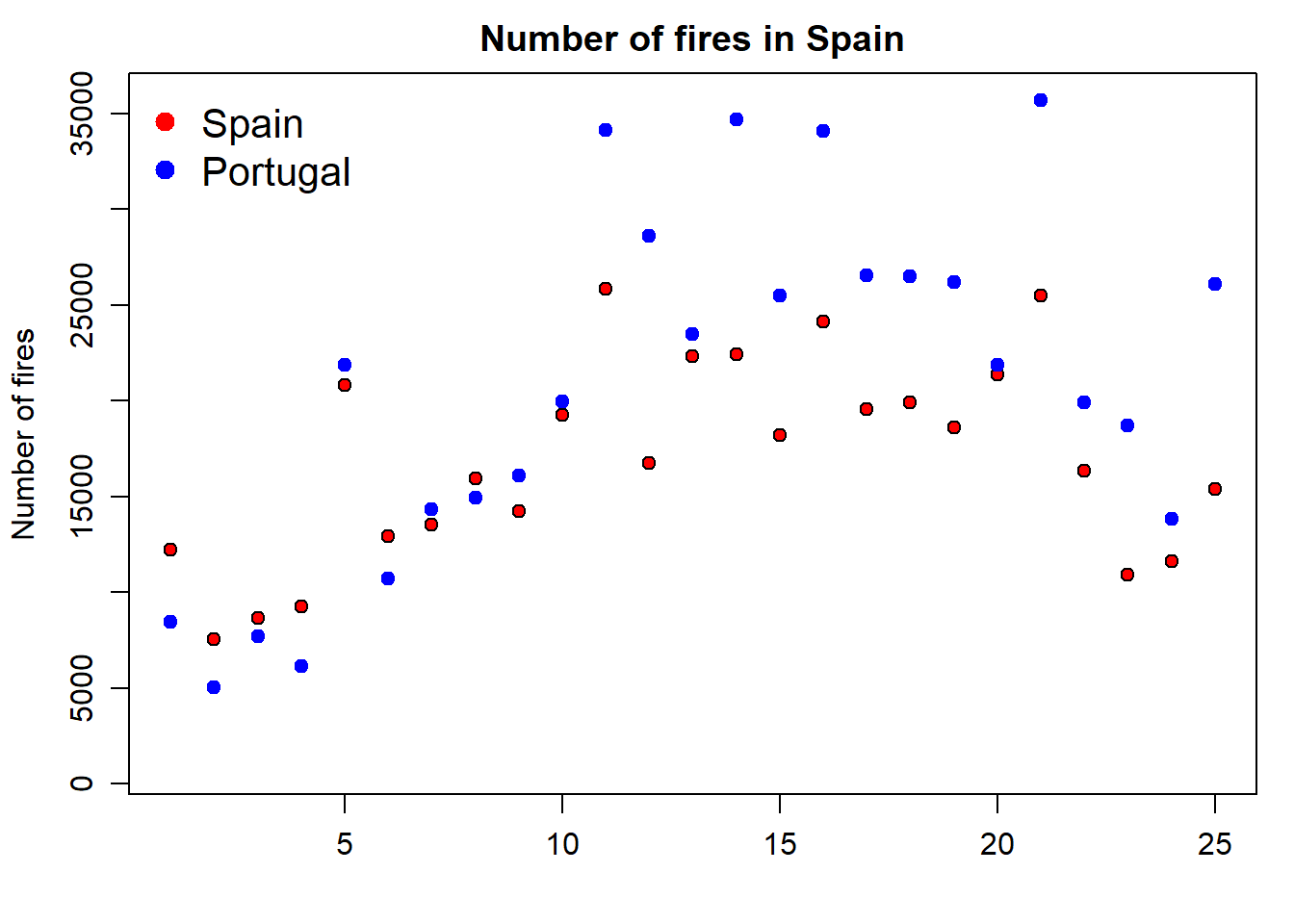

The problem we are now experiencing is that data for Portugal doesn’t fit in the extent of the plot as it is. We should modify this using the xlim and ylim arguments. At this point x-axis works fine, so we’ll leave it as it is. The problem comes from y-axis. We can solve it by passing the ylim argument passing the minimum and maximum values of the fires data. We bring here some functions from Descriptive statistics and summaries.

par(mar = c(3.5, 3.5, 2, 1), mgp = c(2.4, 0.8, 0))

plot(

fires$SPAIN,

pch = 21,

cex = 1,

col = 'black',

bg = 'red',

main = 'Number of fires in Spain',

ylab = 'Number of fires',

xlab = '',

ylim = c(min(fires[, 2:6]), max(fires[, 2:6]))

)

points(

fires$PORTUGAL,

pch = 21,

cex = 1,

col = "blue",

bg = "blue"

)

legend(

"topleft" ,

cex = 1.3,

bty = "n",

legend = c("Spain", "Portugal"),

,

text.col = c("black"),

col = c("red", "blue") ,

pt.bg = c("red", "blue") ,

pch = c(21)

)

📝EXERCISE 3: Explain in detail how the statement ylim = c(min(fires[,2:6]),max(fires[,2:6])) works in terms of the max() and min() functions and its interaction with the firesobject.

Deliverable:

Write a brief report describing the working procedure of the aforementioned instruction.

Well, this is quite easy. In line plots we use lines to represent our data series instead of points which are the default symbol. How do we do that? Just adding an additionla argument to specify we want to use lines with type = 'l':

par(mar = c(3.5, 3.5, 2, 1), mgp = c(2.4, 0.8, 0))

plot(fires$SPAIN,

pch = 4,

type = 'l',

col = 'red')

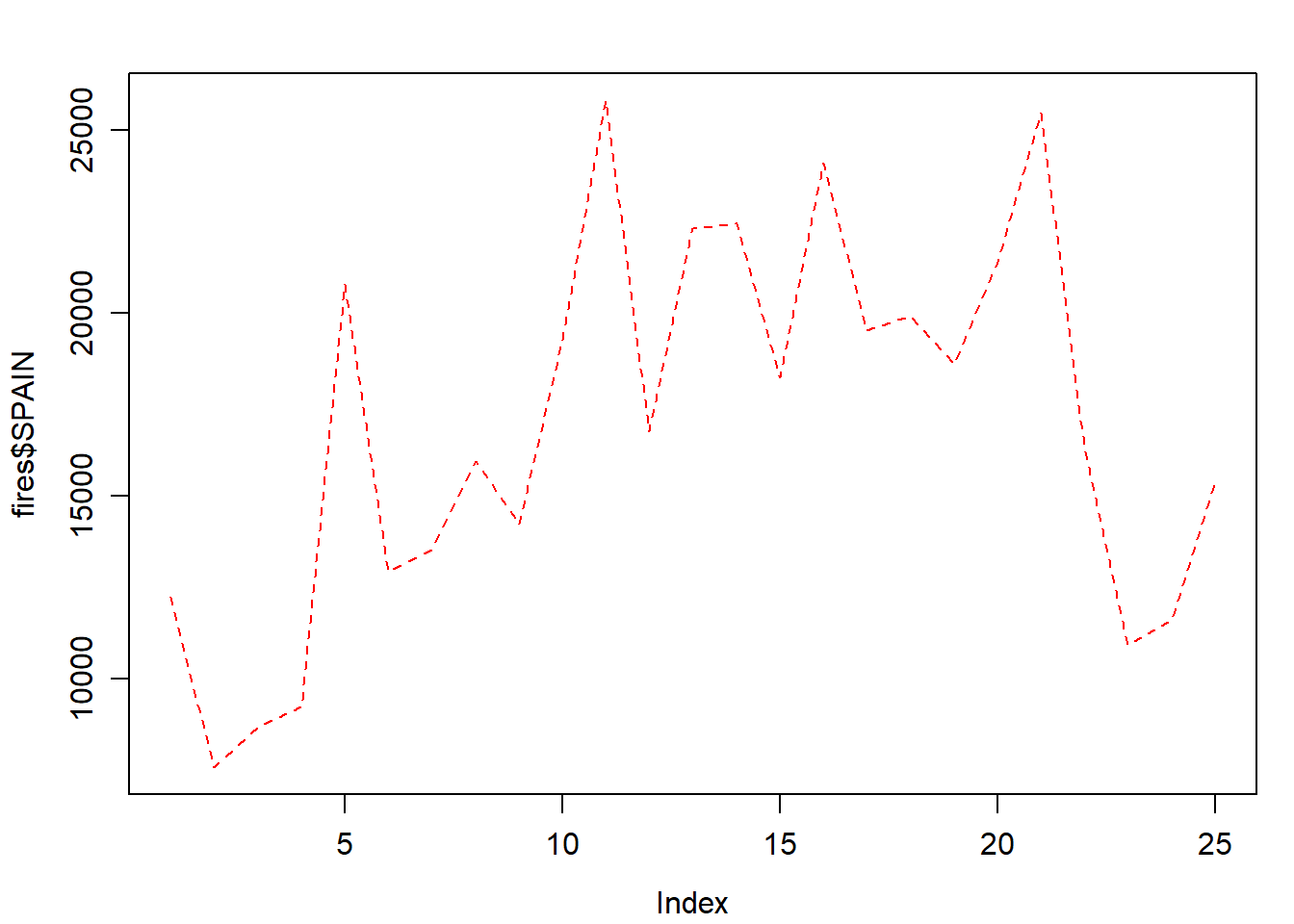

Of course we can change the line style:

par(mar = c(3.5, 3.5, 2, 1), mgp = c(2.4, 0.8, 0))

plot(

fires$SPAIN,

pch = 4,

type = 'l',

lty = 2,

col = 'red'

)

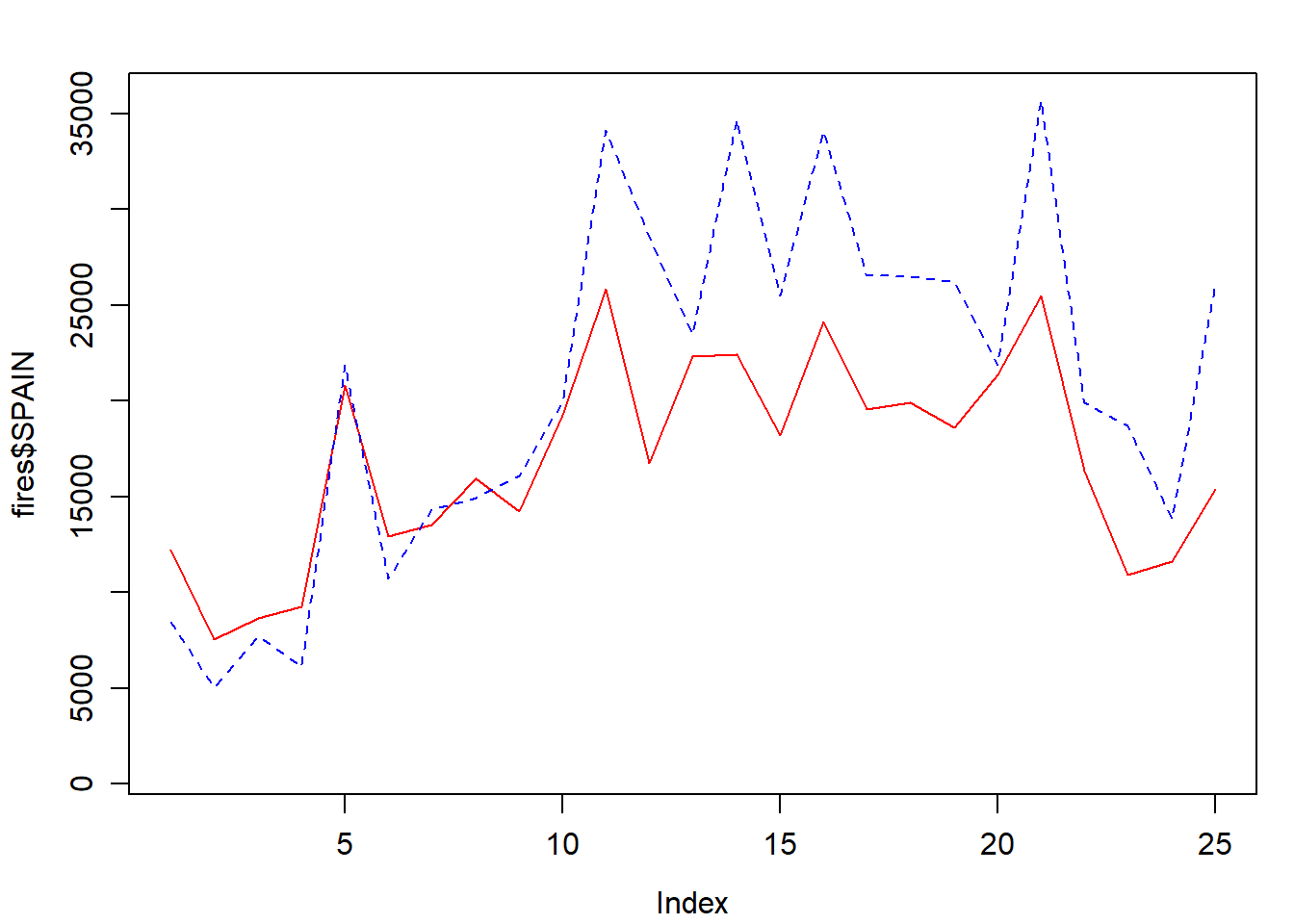

Adding a second (or third, fourth,…,$n$) series is done withlines():

par(mar = c(3.5, 3.5, 2, 1), mgp = c(2.4, 0.8, 0))

plot(

fires$SPAIN,

pch = 4,

type = 'l',

col = 'red',

ylim = c(min(fires[, 2:6]), max(fires[, 2:6]))

)

lines(fires$PORTUGAL,

cex = 1,

col = "blue",

lty = 2)

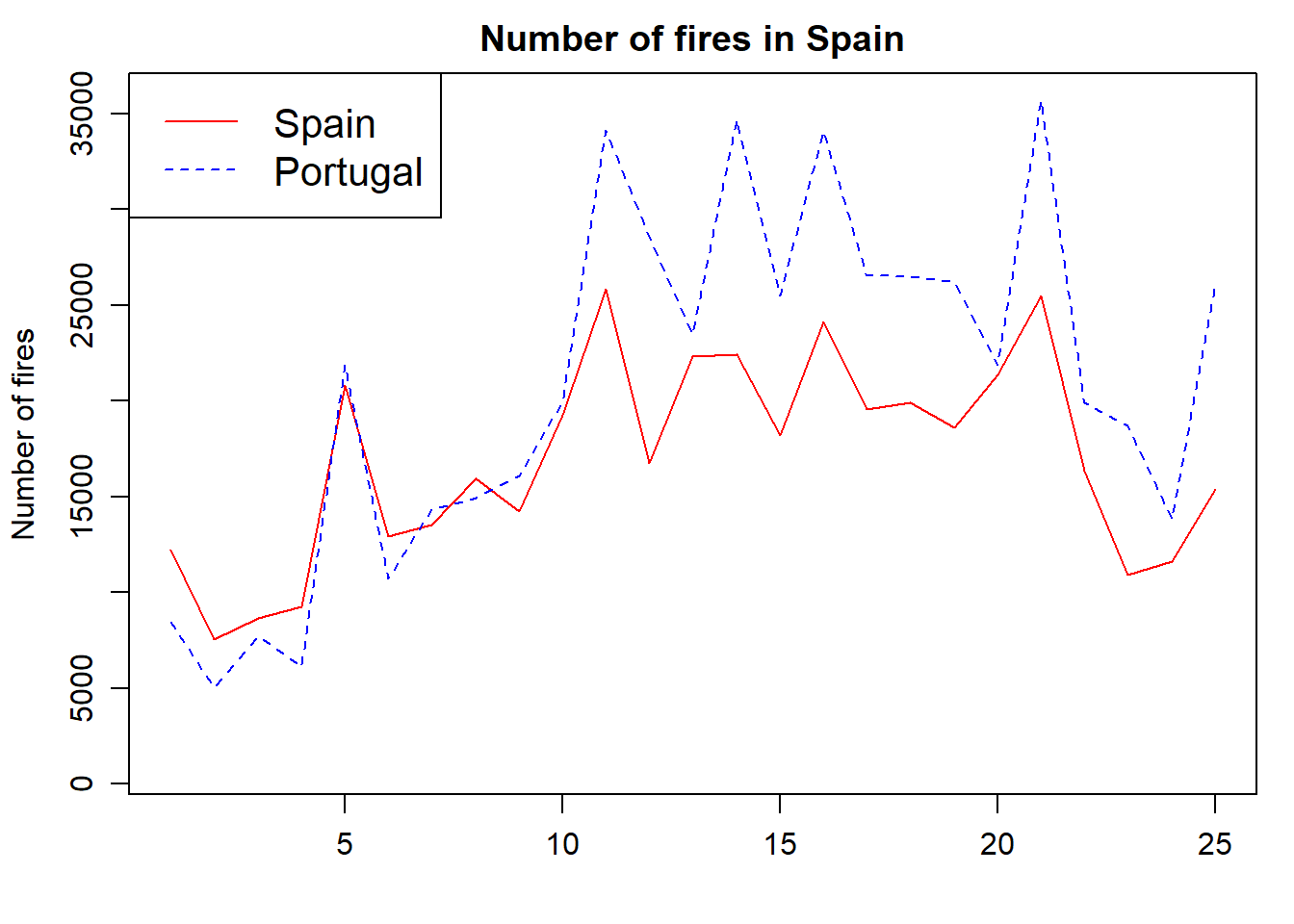

We now adapt the legend and add some titles and we are good to go:

par(mar = c(3.5, 3.5, 2, 1), mgp = c(2.4, 0.8, 0))

plot(

fires$SPAIN,

pch = 4,

type = 'l',

col = 'red',

main = 'Number of fires in Spain',

ylab = 'Number of fires',

xlab = '',

ylim = c(min(fires[, 2:6]), max(fires[, 2:6]))

)

lines(fires$PORTUGAL,

cex = 1,

col = "blue",

lty = 2)

legend(

"topleft" ,

cex = 1.3,

,

lty = c(1, 2),

legend = c("Spain", "Portugal"),

text.col = c("black"),

col = c("red", "blue")

)

The next type of chart we will see is the frequency histogram. It is a bar chart that represents the number of elements of a sample (frequency) that we find within a certain range of values.

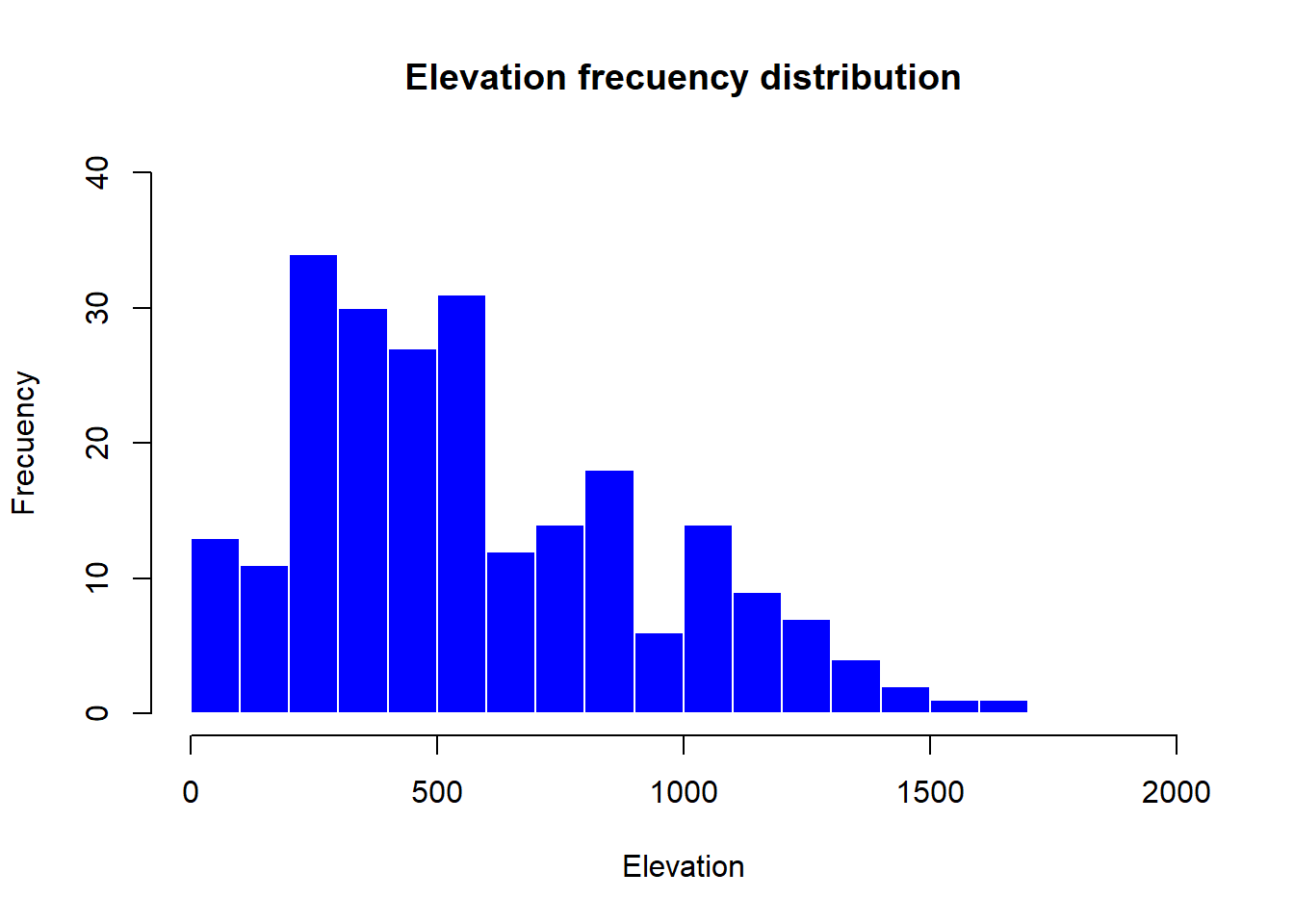

To draw this type of plot R uses the function hist(), which requires as a mandatory argument a vector (or column/row of an array) with the data to be represented. As with all other chart types we have seen, we can use main, xlab… Let’s see at an example using the example data from the regression.txt file10 (See Table 4.1):

regression <- read.table(

"./data/Module_1/regression.txt",

header = TRUE,

sep = '\t',

dec = ","

)

DT::datatable(regression,

options = list(pageLength = 12),

caption = "Table 4.1 Structure of the regression.txt file.")| TavgMAX | Tavg | long | d_atl | d_medit | lat | elevation | |

|---|---|---|---|---|---|---|---|

| 1 | 20.3 | 14.55 | 614350 | 305753 | 152147 | 4489750 | 1423 |

| 2 | 23.8 | 16.1 | 593950 | 271251 | 183121 | 4522150 | 1100 |

| 3 | 24.4 | 18.15 | 567250 | 279823 | 204123 | 4512650 | 1229 |

| 4 | 20.1 | 14.05 | 609150 | 319257 | 150357 | 4475450 | 1610 |

| 5 | 20.3 | 13.85 | 629450 | 306673 | 138390 | 4491350 | 1402 |

| 6 | 21.9 | 16.2 | 666750 | 317312 | 103530 | 4489850 | 1022 |

| 7 | 24.4 | 17.35 | 659450 | 335694 | 102739 | 4468550 | 882 |

| 8 | 20.9 | 15.45 | 654050 | 347119 | 101473 | 4455350 | 828 |

| 9 | 21.2 | 15.2 | 666850 | 359349 | 85698 | 4446050 | 1308 |

| 10 | 24 | 17.3 | 654150 | 369714 | 91221 | 4432150 | 1186 |

| 11 | 26.8 | 19.8 | 668050 | 398728 | 71861 | 4405650 | 589 |

| 12 | 28.1 | 21.65 | 671750 | 402740 | 67917 | 4402450 | 522 |

Tavg_max: maximum average temperature in June.

Tavg: average temperature in June.

long: longitude in UTM values EPSG:23030.

lot: latitude in UTM values EPSG:23030.

d_atl: distance in meters to the Atlantic sea.

d_medit: distance in meters to the Mediterranean sea.

elevation: elevation above sea level in meters.

Then we use the arguments we have seen to customize the plot:

hist(

regression$elevation,

breaks = 15,

main = "Elevation frecuency distribution",

xlab = "Elevation",

ylab = "Frecuency",

col = "blue",

border = "white",

ylim = c(0, 40),

xlim = c(0, 2000)

)

The only new parameters are:

breaks, used to specify the number of bars in the histrogram.

border, used the change the color of bar’s borders.

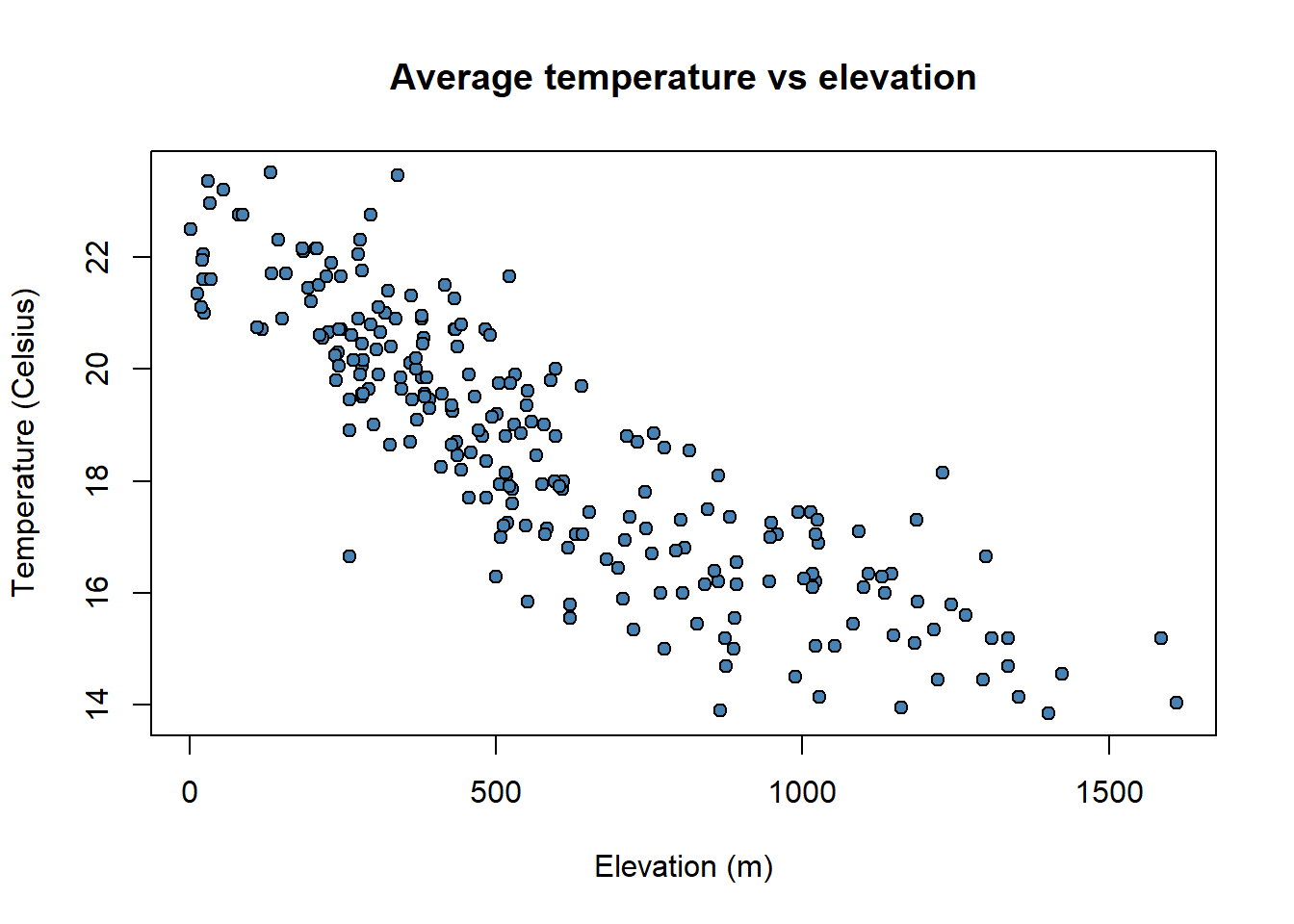

So far we have seen how to construct univariate graphs, ie, represent a single variable or data series. Next we will see a type of bivariate graph, the scatterplot. This type of chart is interesting to visualize relations between two variables, almost mandatory to explore correlation or collinearity in regression analysis. Let’s look at an example with our fire data.

In this case we introduce in the function plot() a second data argument (

Note that the first data series goes to

plot(

regression$elevation,

regression$Tavg,

main = 'Average temperature vs elevation',

ylab = 'Temperature (Celsius)',

xlab = 'Elevation (m)',

pch = 21,

col = 'black',

bg = 'steelblue'

)

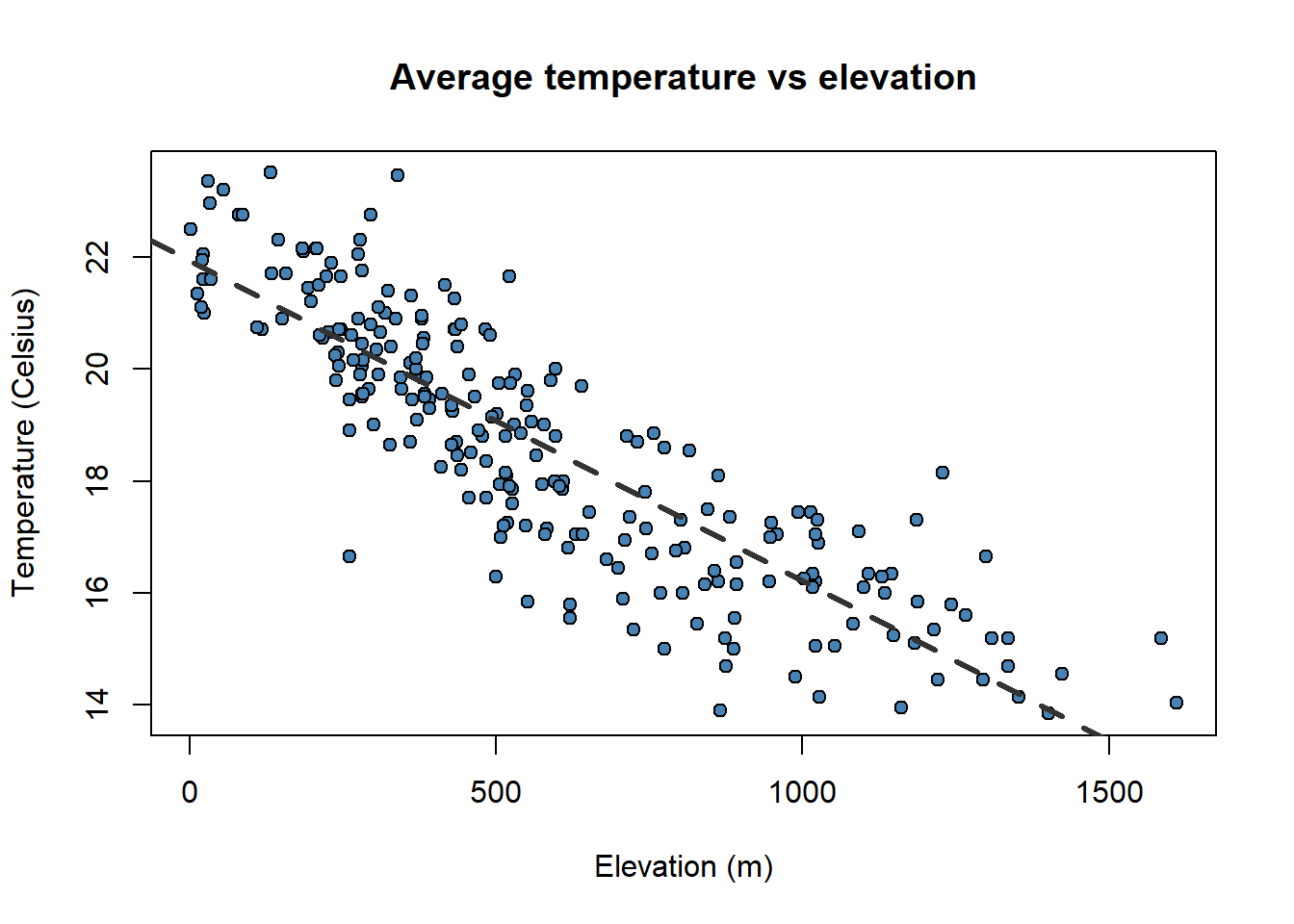

A particularly useful function in combination withscatterplotsisabline()which allows to incorporate a trend lineto the plot. We will further explore this later.

plot(

regression$elevation,

regression$Tavg,

main = 'Average temperature vs elevation',

ylab = 'Temperature (Celsius)',

xlab = 'Elevation (m)',

pch = 21,

col = 'black',

bg = 'steelblue'

)

abline(

lm(regression$Tavg ~ regression$elevation),

lty = 2,

col = 'gray20',

lwd = 3

)

Pay attention to the order in which we have introduced the plot()andabline(). It is the opposite!!.

To finish with plot creation we will see two last possibilities. The first one is how to combine several charts in a single figure and the second how to export an image file from our graphics.

Combining several graphs in R is possible thanks to the function par(mfrow = c(rows, columns)). Using this function we prepare the display window to include several graphs simultaneously:

Since R runs on so many different operating systems, and supports so many different graphics formats, it’s not surprising that there are a variety of ways of saving your plots, depending on what operating system you are using, what you plan to do with the graph, and whether you’re connecting locally or remotely.

The first step in deciding how to save plots is to decide on the output format that you want to use. The following table lists some of the available formats, along with guidance as to when they may be useful.

Here’s a general method11 that will work on any computer with R, regardless of operating system or the way that you are connecting.

Choose the format that you want to use. In this example, I’ll save a plot as a JPG file, so I’ll use the jpeg driver.

The only argument that the device drivers need is the name of the file that you will use to save your graph. Remember that your plot will be stored relative to the current directory. You can find the current directory by typing getwd() at the R prompt. You may want to make adjustments to the size of the plot before saving it. Consult the help file for your selected driver to learn how.

Now enter your plotting commands as you normally would. You will not actually see the plot - the commands are being saved to a file instead.

When you’re done with your plotting commands, enter the dev.off() command. This is very important - without it you’ll get a partial plot or nothing at all. So if we wanted to save a jpg file called “rplot.jpg” containing a plot of x and y, we would type the following commands:

jpeg('rplot.jpg',

width = 800,

height = 600,

res = 100)

# Here goes the plot

par(

mfrow = c(2, 1),

mar = c(3.5, 3.5, 2, 1),

mgp = c(2.4, 0.8, 0)

)

plot(

fires$SPAIN,

pch = 4,

type = 'l',

col = 'red',

ylim = c(min(fires[, 2:6]), max(fires[, 2:6]))

)

plot(

fires$PORTUGAL,

type = 'l',

col = "blue",

lty = 2,

ylim = c(min(fires[, 2:6]), max(fires[, 2:6]))

)

dev.off()png

2 Before finishing the module we would like to give you some insights into the main concepts you should have become familiar with.

You must have mastered object creation and manipulation, mainly table-like objects (array and data.frame) and vectors.

It is not that important to remember the specific syntax of the functions we have covered here but to realize the we can combine objects with function arguments.

We can create scripts to do specific tasks, we do not need to insert instructions one by one manually.

R Core Team. 2021. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing. https://www.R-project.org/.

In this module we will see how to fit linear regression models. This will serve as a basis for later adjusting models based on other error distributions (Poisson or logistic), focusing also on the spatialization of the results and interact with spatial information.

Regression is a mathematical method that models the relationship between a dependent variable and a series of independent variables (“Front Matter” 2014). The regression methods allow modeling the relationship between a dependent variable (term

In the case of linear regression models, the relationship between the dependent variable and the explanatory variable is linear (thus correctly specified), so that the relation is expressed as a first-order polynomial.

The underlying mathematical procedure is based on the Ordinary Least Squares, so it is common to refer to linear regression using this expression or its English equivalent OLS. In statistics, ordinary least squares (OLS) or linear least squares is a method for estimating the unknown parameters in a linear regression model, with the goal of minimizing the sum of the squares of the differences between the observed responses (values of the variable being predicted) in the given dataset and those predicted by a linear function of a set of explanatory variables. The OLS model can be expressed through the following equation:

Where:

What has to be clear is that we can predict or model the values of a certain variable

There are a number of conditions that our data has to meet in order to apply a linear regression model:

Correct specification. The linear functional form is correctly specified.

Strict exogeneity. The errors in the regression should have conditional mean - No linear dependence. The predictors in

Spherical errors: Homoscedasticity a no autocorrelation in the residuals.

Normality. It is sometimes additionally assumed that the errors have multivariate normal distribution conditional on the regressors.

If you are not familiar with the OLS just keep in mind that we cannot apply the method to any sample of data. Our data has to meet some conditions that we will explore little by little.

Most of the existing regression methods are currently available in R. The calibration of linear regression models with R is possible thanks to the stats package, installed by default in the basic version of R.

For the calibration of linear models either simple (one predictor) or multiple (several predictors) we will use the function lm(). A quick look at the help of the function (help('lm')) will allow us to know how the function works:

Of all the arguments of the function we are going to focus on the first two (formula and data), since they are the indispensable ones to be able to adjust not just linear regression but any regression or classification model in R. The data argument is used to specify the table (use data.frame object) with the information of our independent variables. Using the formula argument, we specify in the model which of our variables is the dependent variable, and which independent variables in our data.frame object we want to use to carry out the regression. Let’s take a look at the formula first:

💡v_dep ~ v_indep1 + v_indep2 + . + v_indepn

The formual is composed by two blocks of information separated with the ~ operator. On the left we found the dependent variable specified using its name as it appears on the dataobject. On the right we found the independent variables joined with the + operator. Same as with the dependent one, the independent varibles must be called using their name as it is in data.

We will normally use the above described approach with one exception. Provided we have a data object which only contains a column with our dependent variable and the remaining columns as predictors we can use . after ~ to declare that all the columns in data with the only exception of the dependent variables should be taken as predictors:

💡v_dep ~ .

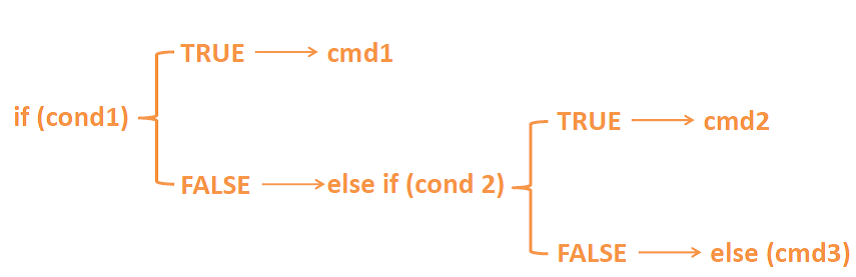

The sintax of the formula argument is the same regardless of the kind of regression or classification model we want to use: - Generalized Linear Models - Regression and Classification Trees - Random Forest - Artificial Neural Networks - …

Let us now see a simple example of model fitting. We will use the data stored in the file regression.txt that we already used in one of the examples of plot creation. I will import the text file with read.table() and store the data in an object called regression

| TavgMAX | Tavg | long | d_atl | d_medit | lat | elevation | |

|---|---|---|---|---|---|---|---|

| 1 | 20.3 | 14.55 | 614350 | 305753 | 152147 | 4489750 | 1423 |

| 2 | 23.8 | 16.1 | 593950 | 271251 | 183121 | 4522150 | 1100 |

| 3 | 24.4 | 18.15 | 567250 | 279823 | 204123 | 4512650 | 1229 |

| 4 | 20.1 | 14.05 | 609150 | 319257 | 150357 | 4475450 | 1610 |

| 5 | 20.3 | 13.85 | 629450 | 306673 | 138390 | 4491350 | 1402 |

| 6 | 21.9 | 16.2 | 666750 | 317312 | 103530 | 4489850 | 1022 |

| 7 | 24.4 | 17.35 | 659450 | 335694 | 102739 | 4468550 | 882 |

| 8 | 20.9 | 15.45 | 654050 | 347119 | 101473 | 4455350 | 828 |

| 9 | 21.2 | 15.2 | 666850 | 359349 | 85698 | 4446050 | 1308 |

| 10 | 24 | 17.3 | 654150 | 369714 | 91221 | 4432150 | 1186 |

Tavg_max: maximum average temperature in June.

Tavg: average temperature in June.

long: longitude in UTM values EPSG:23030.

lat: latitude in UTM values EPSG:23030.

d_atl: distance in meters to the Atlantic sea.

d_medit: distance in meters to the Mediterranean sea.

elevation: elevation above sea level in meters.

TavgMAX Tavg long d_atl

Min. :18.20 Min. :13.85 Min. :566050 Min. : 43783

1st Qu.:23.00 1st Qu.:16.80 1st Qu.:628925 1st Qu.:132564

Median :25.05 Median :18.80 Median :690750 Median :211925

Mean :24.79 Mean :18.64 Mean :687373 Mean :216455

3rd Qu.:26.90 3rd Qu.:20.55 3rd Qu.:738600 3rd Qu.:293938

Max. :30.50 Max. :23.50 Max. :817550 Max. :425054

d_medit lat elevation

Min. : 670 Min. :4399050 Min. : 2.0

1st Qu.: 92912 1st Qu.:4539325 1st Qu.: 302.0

Median :159376 Median :4638550 Median : 505.5

Mean :159368 Mean :4615250 Mean : 575.7

3rd Qu.:227630 3rd Qu.:4700250 3rd Qu.: 813.8

Max. :325945 Max. :4757850 Max. :1610.0 'data.frame': 234 obs. of 7 variables:

$ TavgMAX : num 20.3 23.8 24.4 20.1 20.3 21.9 24.4 20.9 21.2 24 ...

$ Tavg : num 14.6 16.1 18.1 14.1 13.8 ...

$ long : int 614350 593950 567250 609150 629450 666750 659450 654050 666850 654150 ...

$ d_atl : int 305753 271251 279823 319257 306673 317312 335694 347119 359349 369714 ...

$ d_medit : int 152147 183121 204123 150357 138390 103530 102739 101473 85698 91221 ...

$ lat : int 4489750 4522150 4512650 4475450 4491350 4489850 4468550 4455350 4446050 4432150 ...

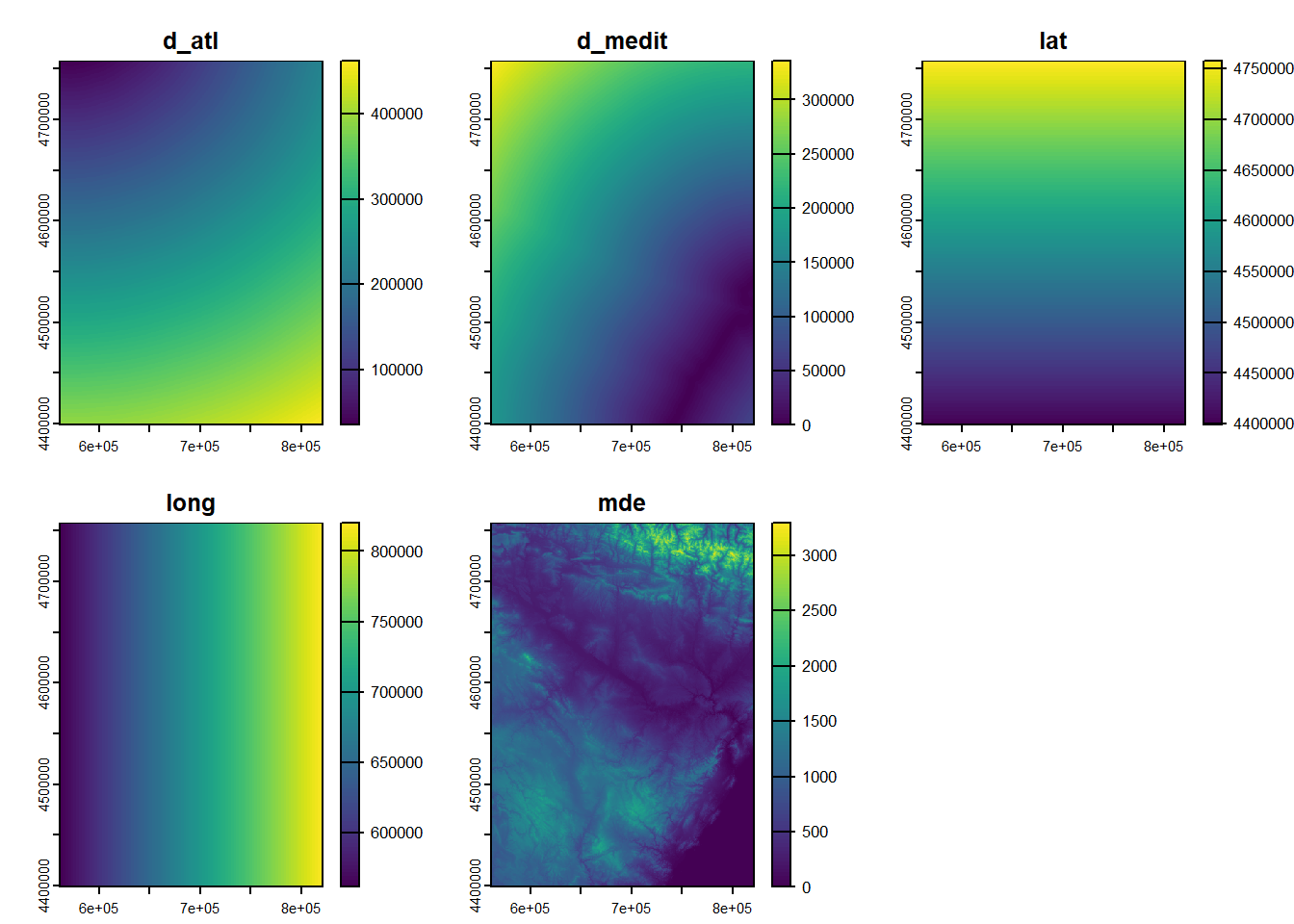

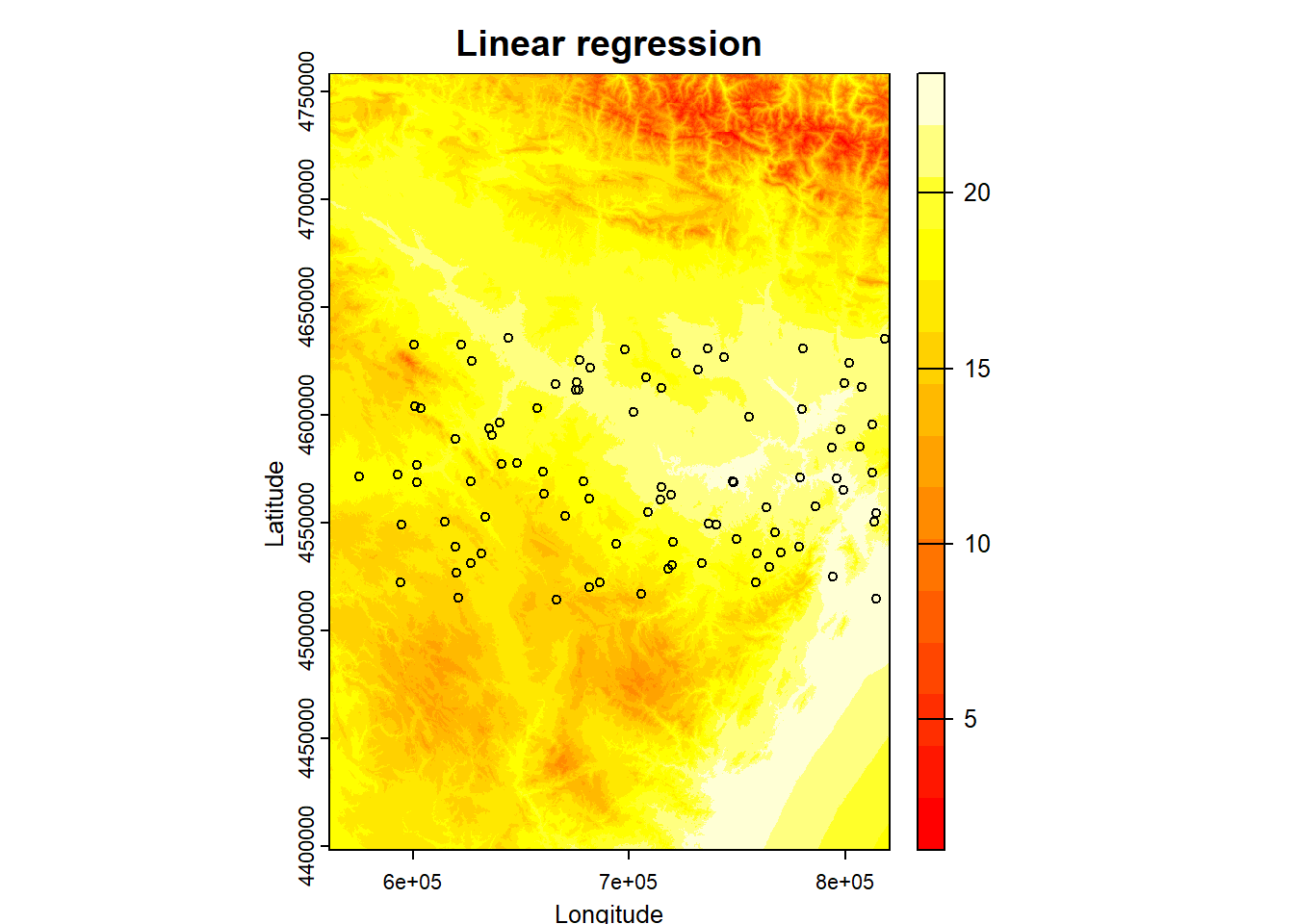

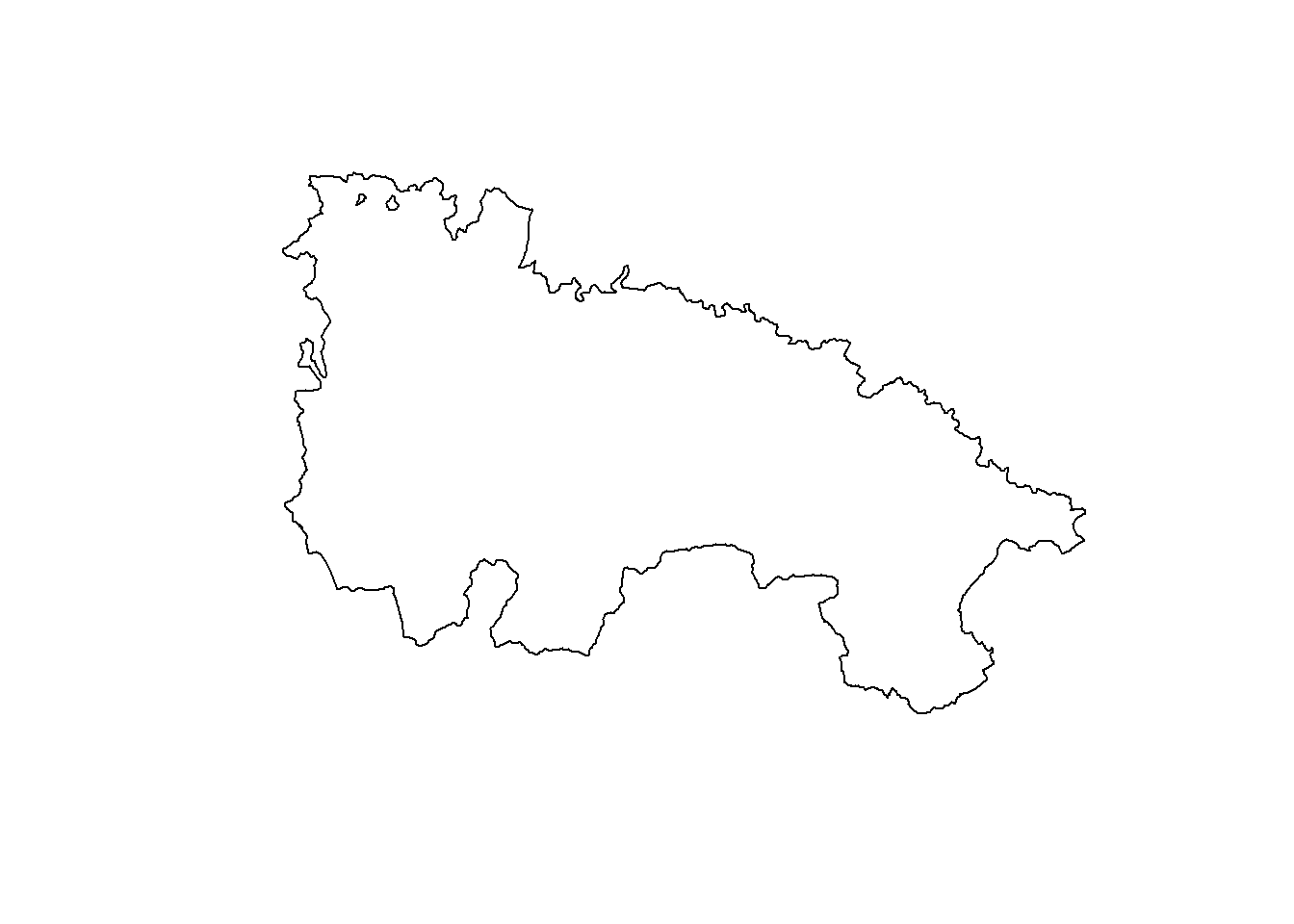

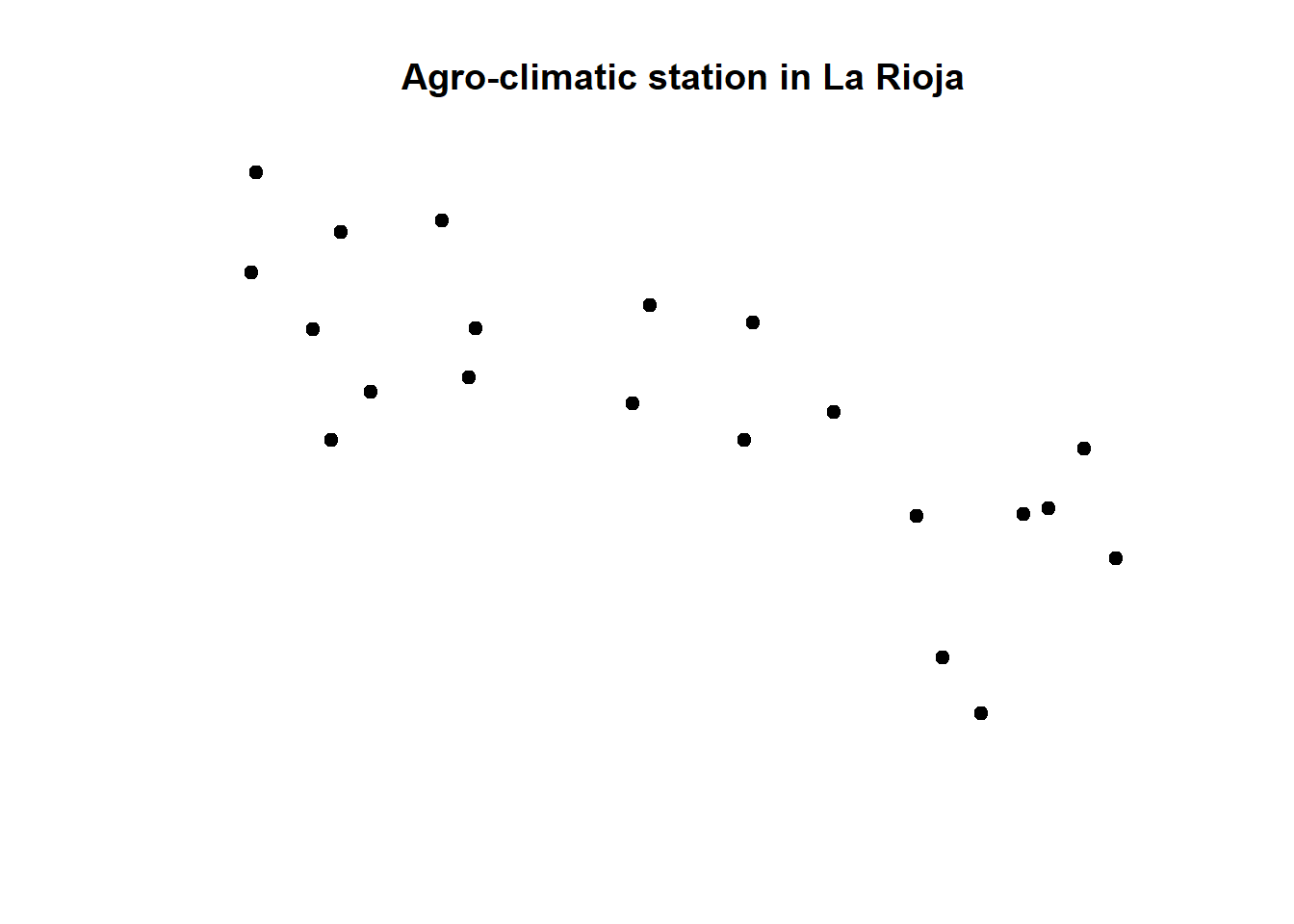

$ elevation: int 1423 1100 1229 1610 1402 1022 882 828 1308 1186 ...In regression.txt we have all the information necessary to calibrate12 a regression model, that is, variables that can operate as dependent (temperatures) and explanatory variables of the phenomenon to be modeled. Let’s see how to proceed to adjust the regression model and visualize the results. In this example we will use Tavg as the dependent variable and all the other as predictors (except Tavg_max):

Call:

lm(formula = Tavg ~ long + lat + d_atl + d_medit + elevation,

data = regression)

Coefficients:

(Intercept) long lat d_atl d_medit elevation

-4.315e+02 -8.919e-05 1.099e-04 8.516e-05 -6.996e-05 -5.492e-03 By executing this instruction we simply obtain the list of regression coefficients resulting from fitting the model. These coefficients give us some information. Strictly the linear regression coefficient gives as the unitary amount of change in the dependent variable on the basis of an increase of 1 of that particular predictor.

However, same as when executing any other function without saving the result, the result is showed in the terminal and lost afterwards. It is appropriate to store the result of the regression model in an object and then apply the summary() function to obtain more detailed results. This is particularly important in this type of functions since the objects generated have much more information than we would simply see in terminal with its execution.

Call:

lm(formula = Tavg ~ long + lat + d_atl + d_medit + elevation,

data = regression)

Residuals:

Min 1Q Median 3Q Max

-4.3020 -0.6010 0.0229 0.5023 2.9665

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -4.315e+02 6.269e+01 -6.883 5.61e-11 ***

long -8.919e-05 1.299e-05 -6.864 6.26e-11 ***

lat 1.099e-04 1.531e-05 7.179 9.84e-12 ***

d_atl 8.516e-05 1.139e-05 7.476 1.63e-12 ***

d_medit -6.996e-05 1.120e-05 -6.245 2.05e-09 ***

elevation -5.492e-03 2.080e-04 -26.403 < 2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.8881 on 228 degrees of freedom

Multiple R-squared: 0.8587, Adjusted R-squared: 0.8556

F-statistic: 277.1 on 5 and 228 DF, p-value: < 2.2e-16Using the function summary(), in addition to the results obtained by calling the object, we obtain additional information such as:

The significance threshold of the predictors Pr(>|t|).

The overall error of the model (residual).

The degree of adjustment of the model (

Below we will see a somewhat more detailed explanation of some of the results that will allow us to better understand the results of the model. The coefficients of the predictors and the constant (intercept) are found in Estimates. These are the values we would use in the linear regression formula to obtain the model prediction13. If the a coefficient is positive it means that the higher the value of that variable the higher temperature and vice versa.

The significance thresholds of the explanatory variables determine whether the variables we have used in our model are sufficiently related. We find them in the under the name t value. This value is the Student t test, and is used to determine if a variable is significant, ie if it has relevant influence on the regression model. R automatically sets whether a variable is significant or not through the Signif. Codes. If a variable is found non-significant we should drop it from our model.

The last of the results we will see is the adjustment of the model by means of the coefficient of determination. We will use the Adjusted R-squared value. This value determines the percentage of variance explained by the model. It can be translated, in order to understand it better, as the percentage of success, although it is not exactly that. For practical purposes, the higher this value, the better the model. Their values range from 0 to 1.

Once the model is adjusted, predictions can be made on the value of the dependent variable, as long as we have data from the independent variables. For this, the predict(model, data) function is used. From this function we can not only make predictions from our models but also allow us to carry out their validation.

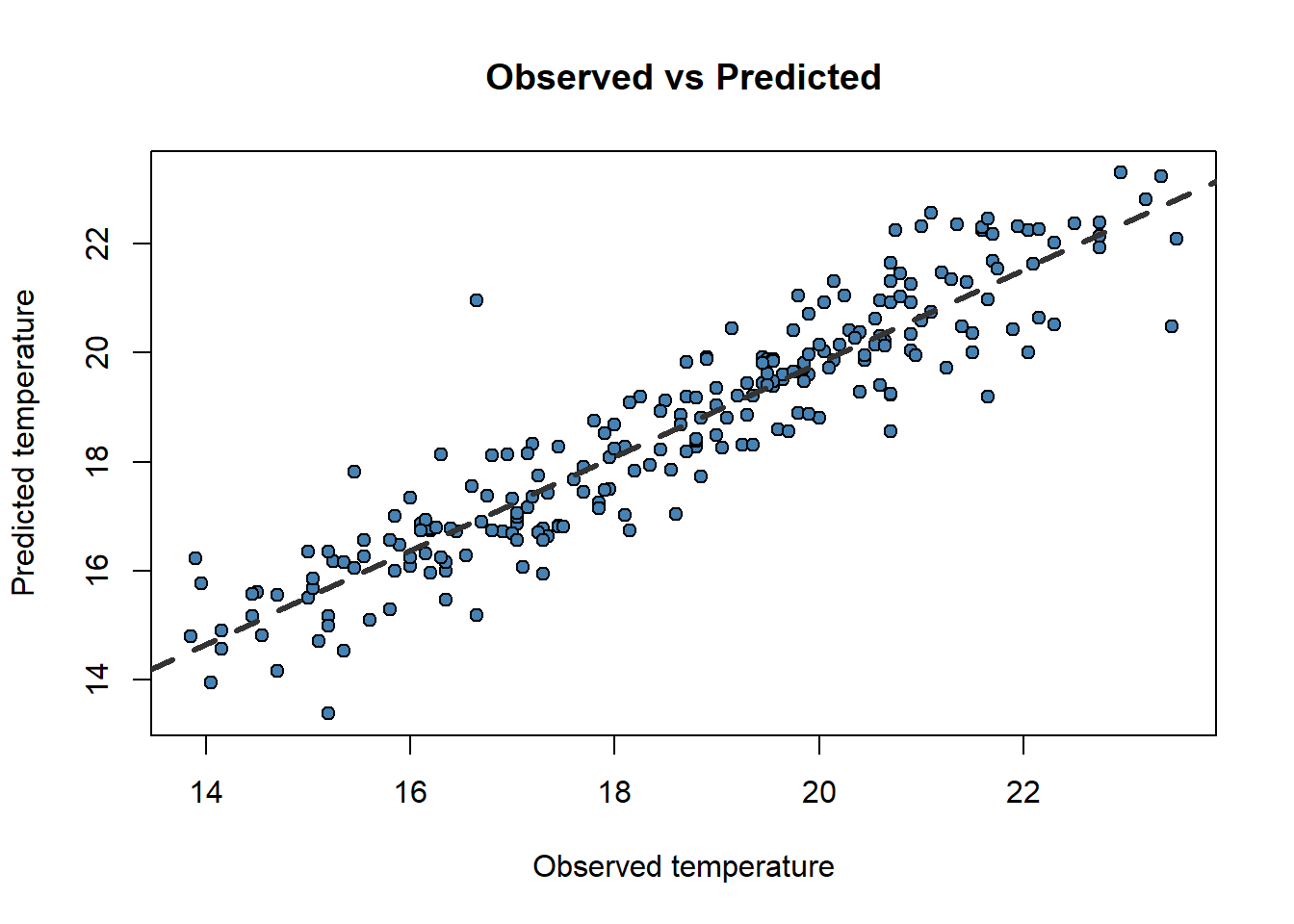

Let’s look at an example by applying predict() to the data matrix we used to fit the model (regression). We will compare the prediction with the value of the dependent variable (observed or actual data). In this way we can see how similar are the observed values (dependent variable) and the predicted values. However, the ideal is to apply this procedure with a sample of data that has not been used to calibrate the model, and thus to use it as a validation of the results. Generally, a random percentage of data from the total sample is usually reserved to carry out this process. We will do this later.

First we apply predict() and store the result in an object mod.pred. Since this function returns a vector with the predictions, the resulting object will be of type vector:

Then we combine the observed (dependent variable) and predicted data into a new object using cbind(). On the one hand we select the column with the dependent variable in the data object (regression) and on the other the prediction we just made (mod.pred). We save the result to a new object and rename the columns as observed and predicted.

obs.pred <- cbind(regression$Tavg, mod.pred)

colnames(obs.pred) <- c("observed", "predicted")

head(obs.pred) observed predicted

1 14.55 14.80975

2 16.10 16.85943

3 18.15 16.74862

4 14.05 13.94983

5 13.85 14.79505

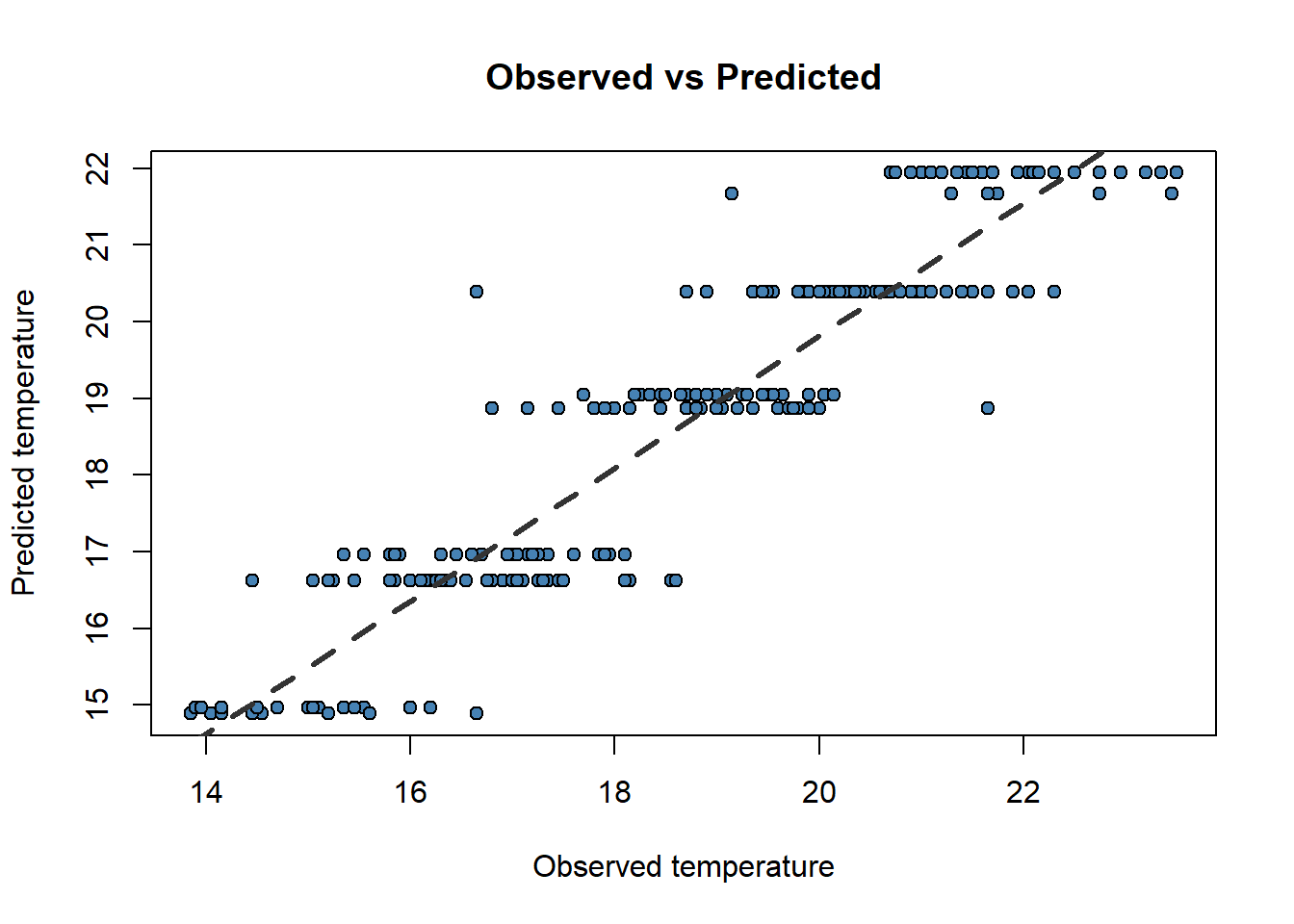

6 16.20 16.73546Now, do you remember scatterplots? They are pretty good way to see how well works our model. We can compare the prediction and the actual values like this:

obs.pred <- data.frame(obs.pred)

plot(

obs.pred$observed,

obs.pred$predicted,

main = 'Observed vs Predicted',

ylab = 'Predicted temperature',

xlab = 'Observed temperature',

pch = 21,

col = 'black',

bg = 'steelblue'

)

abline(

lm(obs.pred$predicted ~ obs.pred$observed),

lty = 2,

col = 'gray20',

lwd = 3

)

Now that weird lm() that you already noticed before makes a little more sense. We only can call abline on regression models. Ideally, the perfect fit would display all dots in a 45 degree line starting in (0,0). That would be the

Another thing we can take a look at is the Root Mean Square Error (RMSE). The RMSE is the standard deviation of the residuals (prediction errors14). Residuals are a measure of how far from the regression line data points are; RMSE is a measure of how spread out these residuals are. In other words, it tells you how concentrated the data is around the line of best fit (abline). Root mean square error is commonly used in climatology, forecasting, and regression analysis to verify experimental results. The formula is:

Where:

In this case we will create our own R formula obtained from this nice r-bloggers entry:

[1] 0.8766348So, taking into account that the RMSE takes the same units that the observed variable, our model predicts temperature with an average error of less than 1 Celsius degree.

One of the conditions our data must satisfy is the linear independence of our predictors. This means that our explanatory variables must be independent one another or what is the same, have low correlation values.

Correlation is a measure of the degree of association between to numeric variables. There are several methods to measure correlation both parametric (at least one variable must be normally distributed) and non-parametric (no assumptions in the data).

Parametric: Pearson correlation coefficient.

Non-parametric: Spearman’s ρ and Kendall’s τ rank correlation coefficients.

Regardless of the method, R uses the function cor() to calculate a coefficient of correlation. By default Pearson's R is calculated although the method argument allows to change the method to a non-parametric alternative.

TavgMAX Tavg long d_atl d_medit lat

TavgMAX 1.0000000 0.9384827 0.4416862 0.27237366 -0.4015863 -0.16677016

Tavg 0.9384827 1.0000000 0.4018594 0.29814899 -0.4287400 -0.22591063

long 0.4416862 0.4018594 1.0000000 0.57574513 -0.7589245 -0.29216294

d_atl 0.2723737 0.2981490 0.5757451 1.00000000 -0.9525821 -0.94604910

d_medit -0.4015863 -0.4287400 -0.7589245 -0.95258210 1.0000000 0.83632353

lat -0.1667702 -0.2259106 -0.2921629 -0.94604910 0.8363235 1.00000000

elevation -0.7789850 -0.8629255 -0.2375738 -0.01776881 0.1757007 -0.02359441

elevation

TavgMAX -0.77898498

Tavg -0.86292553

long -0.23757377

d_atl -0.01776881

d_medit 0.17570069

lat -0.02359441

elevation 1.00000000 TavgMAX Tavg long d_atl d_medit lat

TavgMAX 1.0000000 0.9430791 0.4582967 0.29512643 -0.4244320 -0.2627180

Tavg 0.9430791 1.0000000 0.4139180 0.30806962 -0.4375261 -0.3056250

long 0.4582967 0.4139180 1.0000000 0.58866202 -0.7589619 -0.3180236

d_atl 0.2951264 0.3080696 0.5886620 1.00000000 -0.9540432 -0.9390591

d_medit -0.4244320 -0.4375261 -0.7589619 -0.95404315 1.0000000 0.8499073

lat -0.2627180 -0.3056250 -0.3180236 -0.93905906 0.8499073 1.0000000

elevation -0.8044907 -0.8849362 -0.2816401 -0.09692695 0.2476985 0.1165831

elevation

TavgMAX -0.80449069

Tavg -0.88493622

long -0.28164007

d_atl -0.09692695

d_medit 0.24769852

lat 0.11658310

elevation 1.00000000Any correlation coefficient ranges from -1, indicating high inverse correlation15, to 1, indicating positive correlation. Values around 0 indicate no correlation.

Ideally, our predictors must be uncorrelated. Therefore, correlation values between -0.4 to 0.4 are rather acceptable. If correlation is higher we must drop one of those predictors from our regression model.

The usual thing is to create small scripts joining the necessary instructions to build a model that solves a specific problem. Both the standard R interface in Windows and RStudio provide interfaces for script development. If you work in terminal cmd you will have to use a text editor with ability to interpret code (Notepad++ for example). In RStudio we simply press the button under File (Figure 2.1) to access the scripting window and we will choose the option R Script:

Once this is done, just write the instructions that you want to execute in the appropriate order. Then select them and press the Run button (the green one like a Play button).

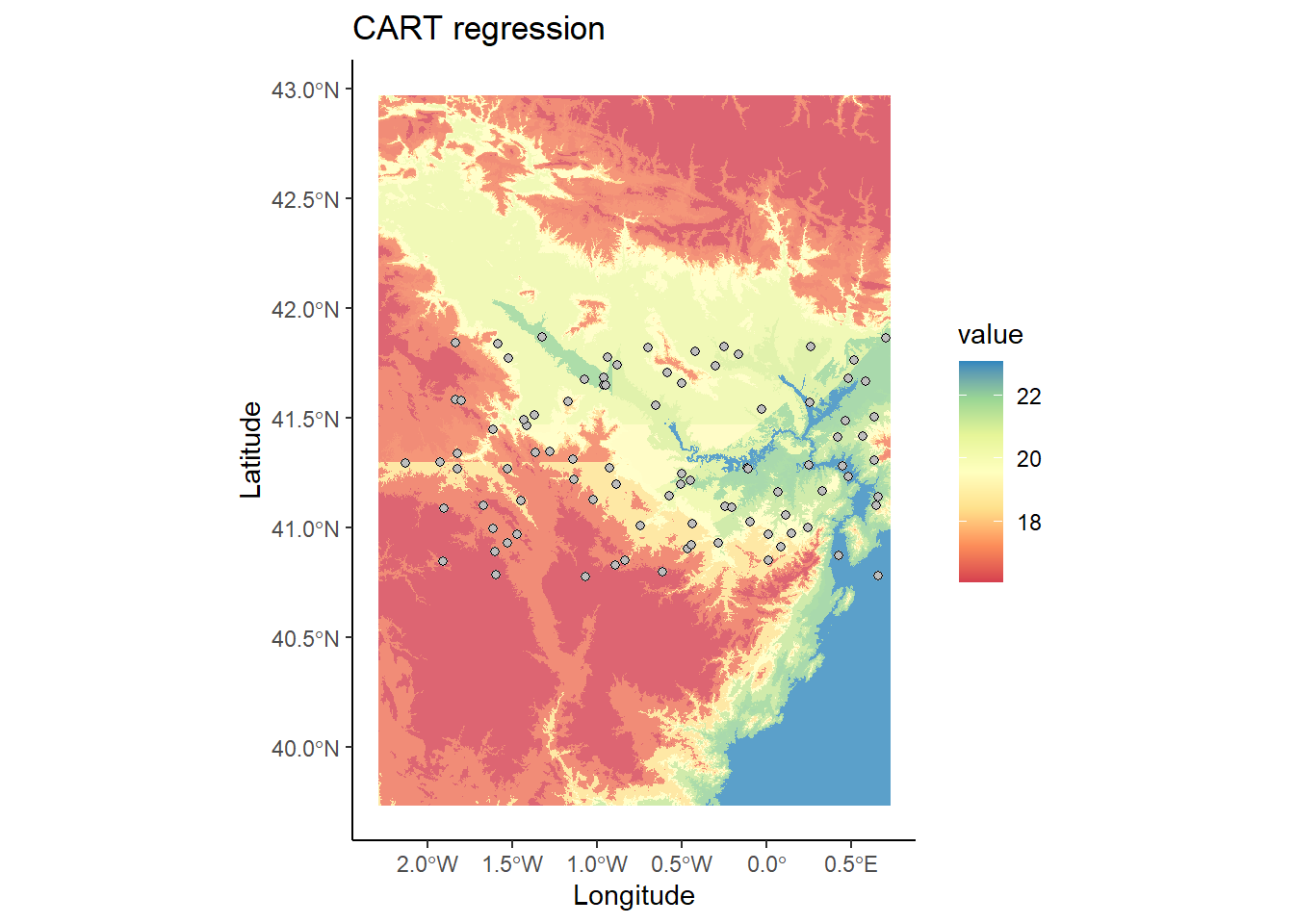

So far what we have done is simply to model the relationship between a dependent variable (mean temperature) and a series of explanatory variables. We have seen that we can make “predictions” from the adjusted models. This implies that if we had spatially distributed information about the explanatory variables (raster layers for example) we could generate maps of mean temperature values from the regression model. Later we will see how to do this hand in hand with some packages for manipulation of spatial information.

As we know, regression analysis is a statistical technique to study the relationship between variables. We have seen a basic example where we have calibrated a linear regression model to predict temperatures from some explanatory variables.

In the case of linear regression, the relationship between the dependent variable and the explanatory variables has a linear profile, so the result is the equation of a line. This type of regression implies a series of assumptions our data must fulfill. Specifically, it is necessary to comply with the following:

Correct specification. The linear functional form is correctly specified.

Strict exogeneity. The errors in the regression should have conditional mean

No linear dependence. The predictors in

Spherical errors. Homoscedasticity and no autocorrelation in the residuals.

Normality. It is sometimes additionally assumed that the errors have multivariate normal distribution conditional on the regressors.

As be have already seen, correlation analysis and scatterplots are a useful tool for ascertaining whether or not the assumption of independence of explanatory variables is met. We are now going to find out whether our data fits the normal distribution.

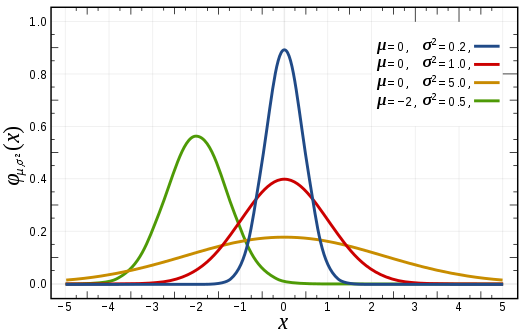

A continuous random variable,

The variable can take any value (−∞,+∞)

The density function is the expression in terms of the mathematical equation of the Gaussian curve:

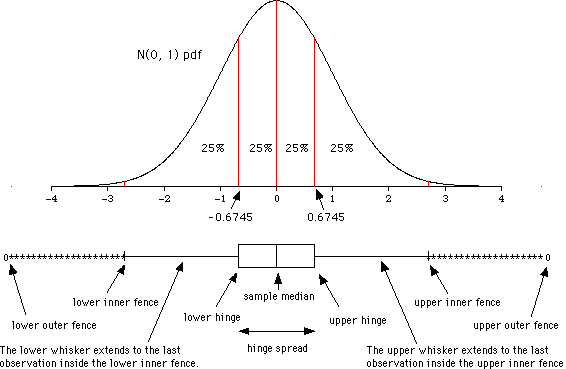

There are several ways to test whether a sample data follows a normal distribution or not. There are visual methods such as a frequency histograms, boxplot or quartile-quartile charts (qqplot). There are also several tests of statistical significance that return the probability of following the normal distribution.

Normality tests:

Shapiro-Wilk test: shapiro.test()

Kolmogorov-Smirnov

Anderson-Darling test: ad.test()

These tests are available in R included in several packages:

stats (preinstalled)

nortest (requires download and installation)

Let’s look at an example of a normality test using the data from regression.txt. We will create different plots to visualize the distribution of values of the variables. Then we will calculate some of the tests above mentioned.

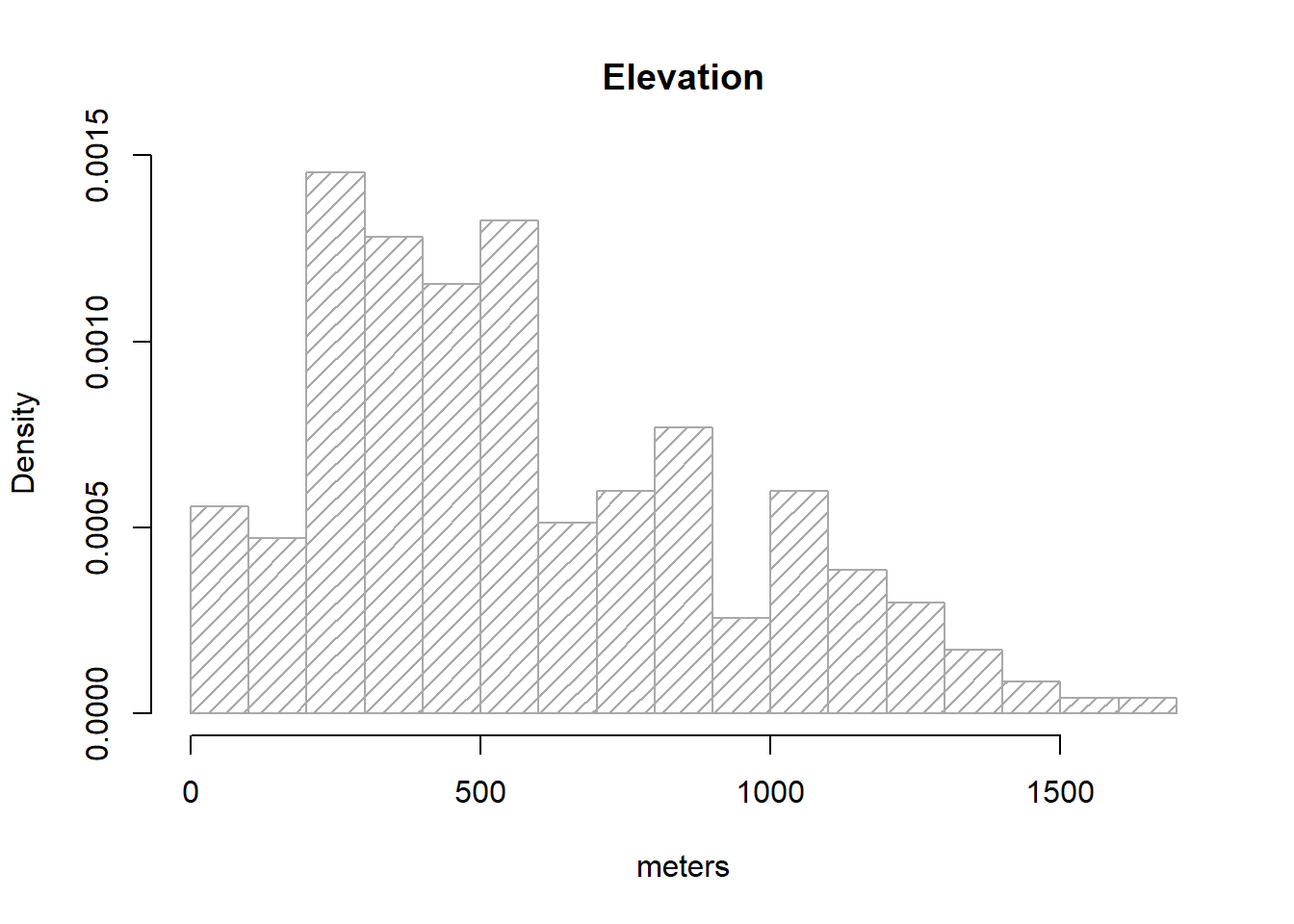

We are already familiar with this kind of plot so let’s see how to use it to find out the distribution of our elevation variable in regression.txt:

regression <- read.table(

'./data/Module_2/regression.txt',

header = TRUE,

sep = '\t',

dec = ','

)

hist(

regression$elevation,

breaks = 15,

density = 15,

freq = FALSE,

col = "darkgray",

xlab = "meters",

main = "Elevation"

)

Note that I brought a couple of new arguments on hist().density=15 changes the solid filled bars with texture lines (this is just cosmetic). freq=FALSE allows expressing the y-axis in terms of probability rather than counts/frequency, which is needed to overlay the normal distribution line of our data. Other than that everything remains the same.

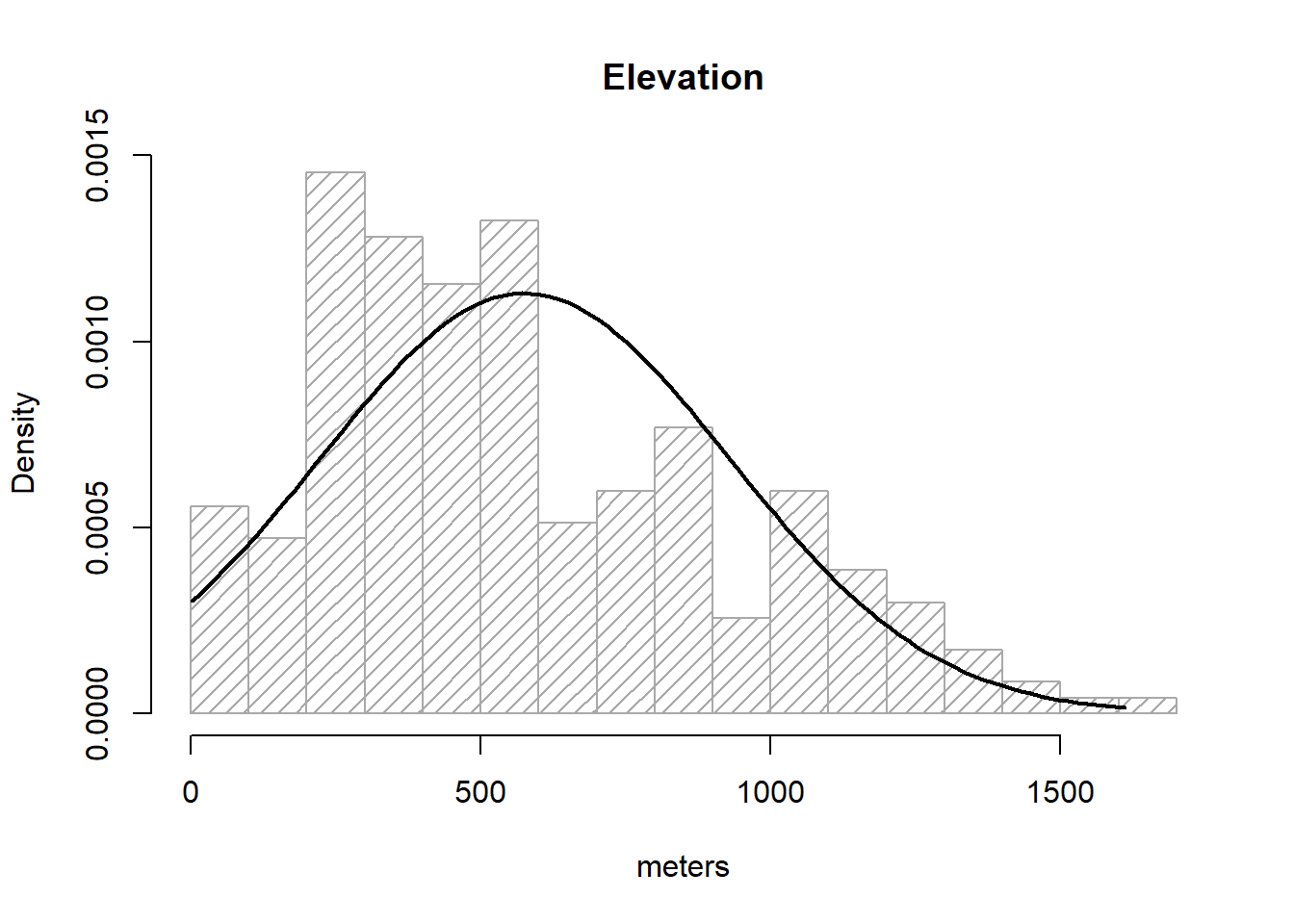

As we know, a normal distribution is characterized by a mean μ and a standard deviation δ. Since we can obtain that information from our elevation column:

So, to overlay the theoretical normal distribution line of our data we create a sequence of x-values (see seq()16) and then fit the expected y-value using dnorm, a command that allows to build the normal y-values sequence.

g <- regression$elevation

h <- hist(

regression$elevation,

breaks = 15,

density = 15,

freq = FALSE,

col = "darkgray",

xlab = "meters",

main = "Elevation"

)

xfit <- seq(min(g), max(g), length = 100)

yfit <- dnorm(xfit, mean = mean(g), sd = sd(g))

lines(xfit, yfit, col = "black",lwd = 2)

So, what we did above is:

Save elevationdata in g, just to avoid excessive typing.

Create our histogram and save it to an object called h17.

Create the a vector xfit from the minimum value of elevationto the maximum.

Use dnorm() to obtain the y values and save it into yfit.

Overlay our theoretical line resulting from xfit-yfit.

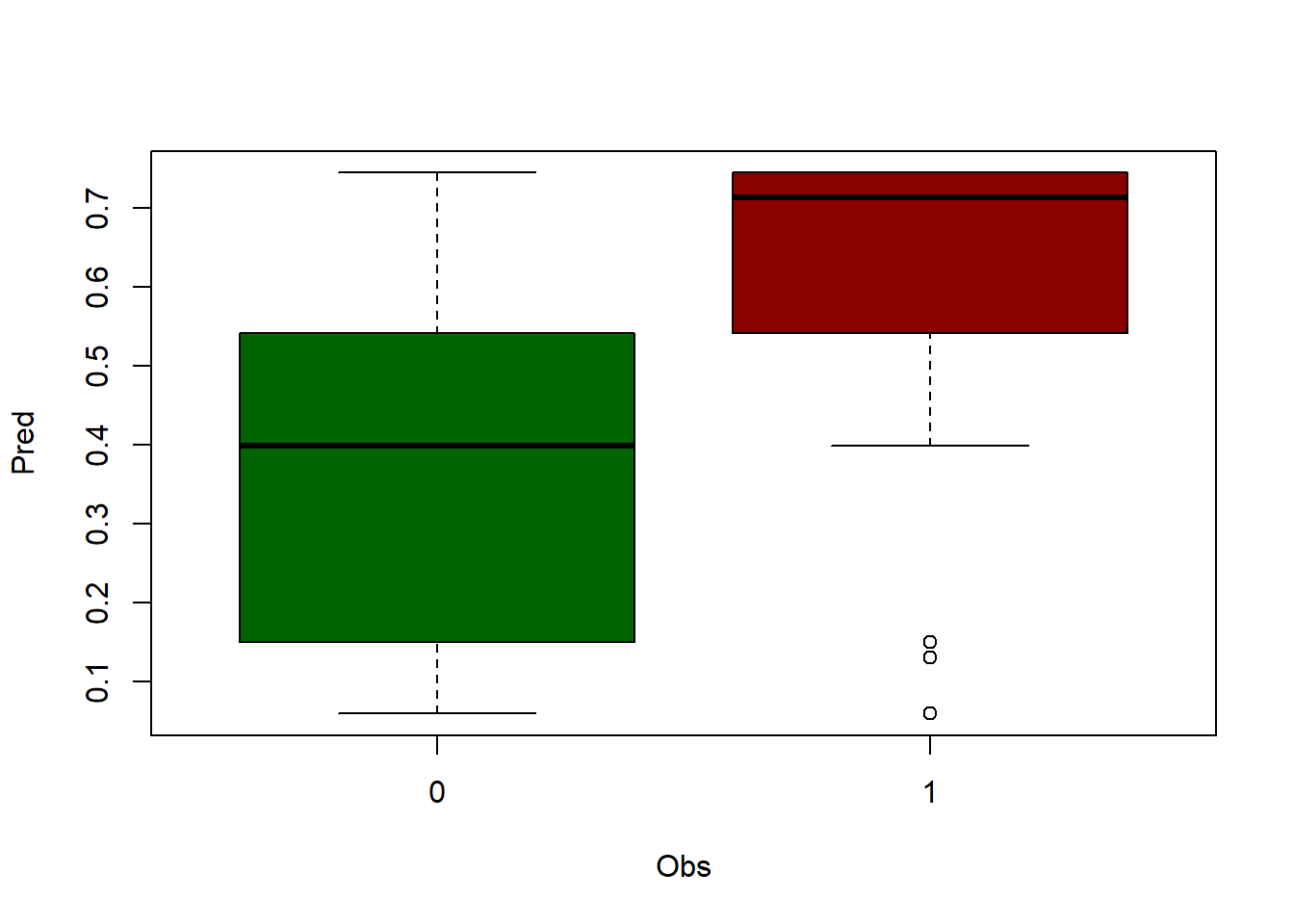

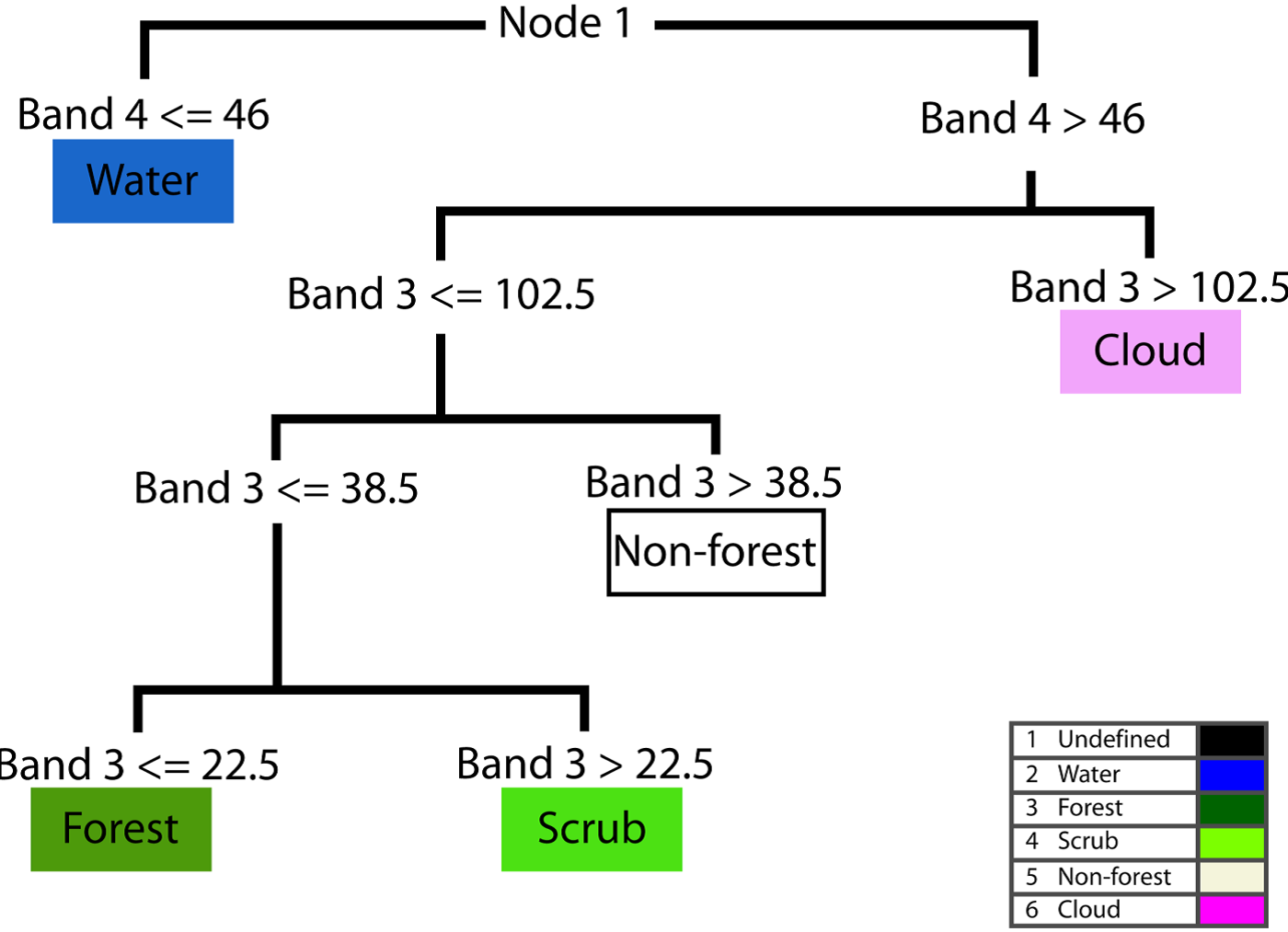

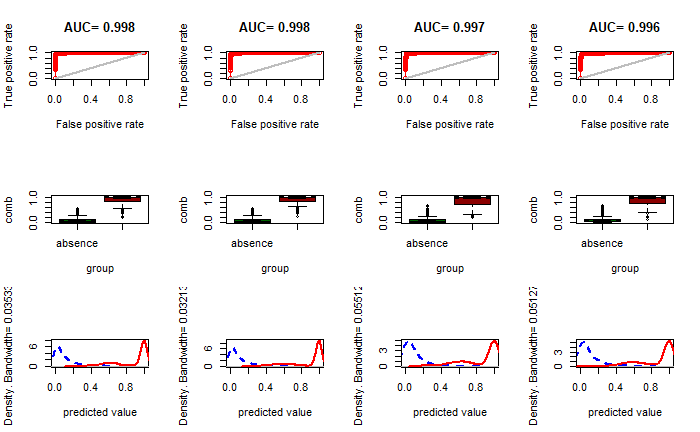

If the bar plot resembles the line then we can consider our data as normal. I am aware this is not fancy at all. We’ll see some other options.